Generative AI systems present unique security challenges due to their ability to create new data, making them targets for attacks aimed at compromising the system or producing harmful outputs. Effective security measures are vital for maintaining trust in AI-generated content and preventing the propagation of misinformation or malicious content.

This is part of a series of articles about vulnerability management

In this article:

- Generative AI Security Trends and Statistics

- Security Concerns in the Age of Generative AI

- What Is the OWASP LLM Top 10?

- How to Mitigate Generative AI Cyber Security Risks

Generative AI Security Trends and Statistics

The rapid adoption of generative AI (Gen AI) technologies across various industries has impacted business operations and security. According to a McKinsey Global Survey, one-third of respondents indicated their organizations are already using Gen AI tools in at least one business function. This widespread use introduces complex security challenges.

While 40% of these respondents acknowledge an increase in investment towards AI due to advances in Gen AI, less than half believe their organizations are effectively mitigating risks associated with these technologies.

According to Statistica, 46% of business and cybersecurity leaders are concerned that generative AI will result in more advanced adversarial capabilities, while 20% are concerned the technology will lead to data leaks and exposure of sensitive information.

To illustrate the extent of the problem, a study by Menlo Security showed that 55% of inputs to generative AI tools contain sensitive or personally identifiable information (PII), and found a recent increase of 80% in uploads of files to generative AI tools, which raises the risk of private data exposure.

Related content: Read our guide to vulnerability management tools

Security Concerns in the Age of Generative AI

There are several security risks that affect generative AI systems.

Privacy Violations

Generative AI systems often require access to vast amounts of data, including potentially sensitive or personal information, to train their models and generate content. There is a risk that these systems could inadvertently generate outputs that contain or imply private information about individuals, leading to privacy violations.

Deepfakes and Misinformation

The capability of generative AI to produce highly realistic and convincing fake content, known as deepfakes, poses a risk for spreading misinformation and manipulating public opinion. These technologies can create believable images, videos, and audio recordings of individuals saying or doing things they never did, potentially damaging reputations, influencing political processes, and exacerbating social divisions.

Algorithmic Transparency

Transparency in how generative AI systems function is crucial for both security and trust. Algorithmic transparency involves making the processes and decision-making criteria of AI models understandable to users and stakeholders. This transparency helps in identifying potential biases, understanding the source of errors, and ensuring accountability in AI operations. However, achieving algorithmic transparency is challenging. Many AI models, especially deep learning models, operate as “black boxes,” making it difficult to explain how they arrive at specific outputs.

Ethical Use

The ethical use of generative AI involves ensuring that these technologies are developed and deployed in ways that align with societal values and do not cause harm. This includes addressing biases in AI models, which can lead to discriminatory outcomes. Ensuring diversity in training data and regularly auditing AI outputs for bias are key practices for promoting fairness. Ethical use also encompasses the responsibility to avoid over-reliance on AI, ensuring that human oversight remains a part of critical decision-making processes.

AI Compliance Challenges

Compliance with regulatory frameworks and industry standards is a significant challenge for organizations deploying generative AI systems. These systems often process large volumes of personal and sensitive data, necessitating adherence to data protection laws like GDPR and CCPA through robust anonymization and transparency measures. Intellectual property rights present another complex issue, requiring organizations to navigate ownership questions of AI-generated content.

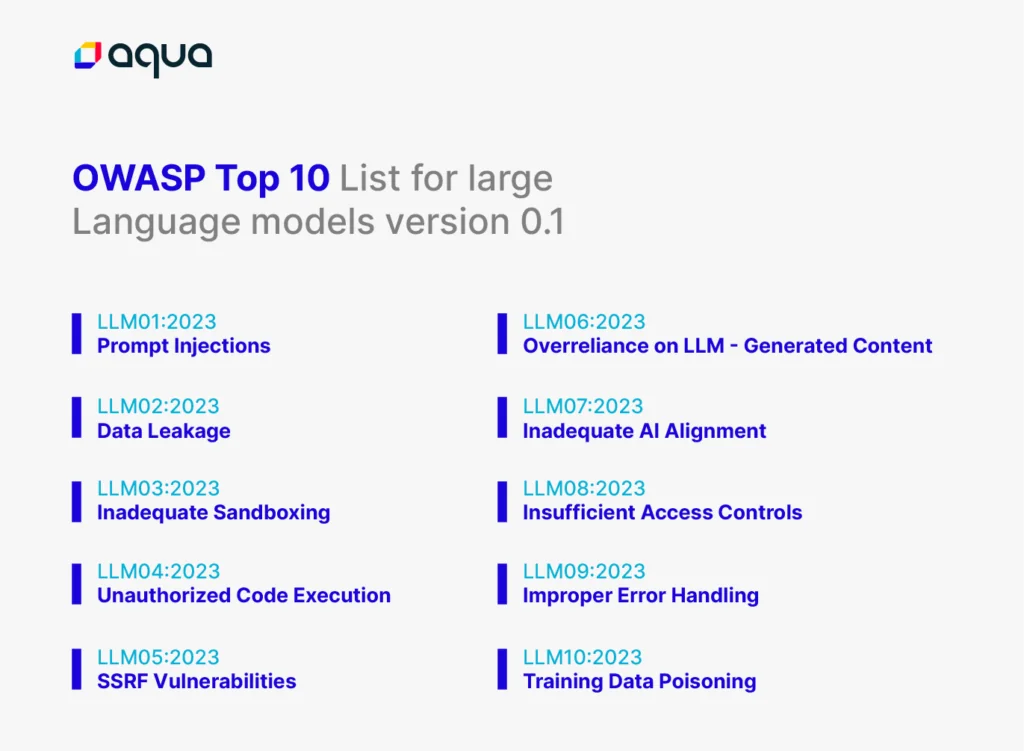

What Is the OWASP LLM Top 10?

The OWASP LLM Top 10 is a list that outlines the most critical security vulnerabilities specific to Large Language Models (LLMs), which have gained mainstream popularity. Compiled by the Open Web Application Security Project (OWASP), this list serves as a resource for developers, security professionals, and organizations involved in deploying LLM applications.

The list aims to raise awareness about common vulnerabilities, offering insight into potential threats and guiding best practices for securing LLM systems. It reflects collective knowledge from nearly 500 international experts, ensuring it addresses the most pressing security challenges in generative AI.

Here are the top 10 LLM security risks according to OWASP:

- Prompt Injection: Attackers craft inputs to manipulate an LLM into executing unintended actions or revealing sensitive information.

- Insecure Output Handling: Trusting LLM outputs without validation can lead to issues like XSS or remote code execution.

- Training Data Poisoning: Maliciously altered training data can bias the model, degrade its performance, or trigger harmful outputs.

- Model Denial of Service: Excessive resource consumption by attackers can degrade service quality or incur high operational costs.

- Supply Chain Vulnerabilities: Issues with training data, libraries, or third-party services can introduce biases or security weaknesses.

- Sensitive Information Disclosure: Inadequately secured LLMs may inadvertently expose personal data or proprietary information.

- Insecure Plugin Design: Poorly designed plugins can lead to unauthorized actions or data leaks when interacting with external systems.

- Excessive Agency: Overly autonomous LLM-based systems might perform unforeseen and potentially harmful actions due to excessive functionality rights.

- Overreliance: Users depending too heavily on LLM outputs without verification may face legal issues or propagate misinformation.

- Model Theft: Unauthorized copying of an LLM can result in competitive disadvantages or misuse of intellectual property.

See the official OWASP resource page for the LLM top 10.

How to Mitigate Generative AI Cyber Security Risks

Here are some of the measures that organizations can take to minimize the security risks associated with using Gen AI.

Data Sanitization

Implement proper data sanitization processes to ensure that sensitive or personally identifiable information is protected. This involves identifying and removing unnecessary or potentially risky data points from training datasets before they are used to train generative AI models. Additional techniques such as differential privacy can be applied to anonymize data while preserving its utility for training purposes.

Secure Model Development and Deployment

Secure coding practices should be followed throughout the development lifecycle of generative AI models to minimize vulnerabilities. This includes conducting thorough security reviews, implementing access controls, and applying encryption techniques to protect data both at rest and in transit. Establish secure deployment pipelines and regularly update models with patches and security fixes to address emerging threats.

Continuous Monitoring and Vulnerability Management

Deploy monitoring mechanisms to detect anomalous behavior or potential security breaches in real time. This includes monitoring data inputs and outputs, model performance metrics, and system logs for suspicious activity. Additionally, there should be vulnerability management processes in place to promptly address and remediate security flaws as they are discovered.

Adversarial Testing and Defense

Conduct rigorous adversarial testing to evaluate the resilience of generative AI systems against potential attacks and exploitation attempts. This involves simulating various threat scenarios and adversarial inputs to identify weaknesses in the system’s defenses and iteratively improve its security posture. Implement defense mechanisms like anomaly detection algorithms and input validation checks to mitigate the impact of adversarial attacks.

Leverage Explainable AI

Incorporating explainable AI techniques can enhance the transparency and interpretability of generative AI systems, enabling stakeholders to understand how these systems make decisions and generate content. This fosters trust in the technology and helps teams identify and mitigate security vulnerabilities or biases that may arise during the model’s operation.

Related content: Read our guide to cyber security posture