Since the second half of 2017, Kubernetes has been gaining momentum, both in adoption and in ecosystem support. We see more and more enterprises choosing Kubernetes to orchestrate their cloud native deployments. This is in no small part thanks to the enterprise-grade features added in versions 1.8 and 1.9, including many security-related constructs that make it easier to manage user authentication and authorization, network segmentation, Master Node access, and more.

Why is securing Kubernetes so important?

Kubernetes is not just another application hosted in your datacenter. It is an application “operating system” for the cloud native era. It controls what gets deployed and where, for applications that may span thousands of nodes, must always be highly available, serve millions of users and handle commercially and personally sensitive data.

A recent reminder of this was the breach at Tesla, where an unsecured Kubernetes management console enabled attackers to run crypto-mining containers in the company's AWS account. This was not a sophisticated attack by any stretch of the imagination, but it underlines how much harm can come from simple human error, unsecured default settings, and a lack of understanding of what happens when best practices are not followed.

So What’s Different About Securing Kubernetes?

Though it offers a wealth of possibilities, securing Kubernetes is not a push-button process, and the quality of implementations varies more widely than it does with older, more road-tested technologies. This is due to several factors:

- Kubernetes, as an open source project, aims to provide all the capabilities needed to make it run both smoothly and securely. However, in order to remain generic and suitable for a wide variety of use cases, it deliberately stops short of prescribing strict implementation models. This is also why there’s such a diverse ecosystem of solutions that enhance Kubernetes management, networking, storage, and security. The downside is that configuring Kubernetes according to best practices is not straightforward. Using upstream Kubernetes is like buying ingredients at the market and cooking them yourself, as opposed to ordering take-out or going to a restaurant. Not everyone has the skills, the time, or the inclination to do it.

- Which brings us to the second factor – the skills gap. There aren’t many Kubernetes experts out there, and even fewer security experts that know Kubernetes or Kubernetes experts that know security. Anything that can minimize the effort involved and make it easier for organizations to implement best practices is a blessing.

- The third factor is segregation of duties: The people tasked with deploying Kubernetes-based cloud native applications should not be the ones responsible for overseeing security. Even in organizations that embrace DevSecOps, someone must ultimately own the security policy and its enforcement, and that cannot be the same individual whose job it is to deploy applications, and deploy them fast above all else.

We’ve listened to our customers and built a full-featured, Kubernetes-oriented solution that provides full lifecycle security for Kubernetes deployments, while relying on native upstream Kubernetes capabilities wherever possible to ensure forward compatibility and reduce vendor lock-in. This release is a significant leap forward for our customers and for anyone looking to secure their K8S environments, and we at Aqua will continue to contribute to the Kubernetes community and make the best use of its evolving capabilities.

Let’s take a closer look at the key features of Aqua 3.0:

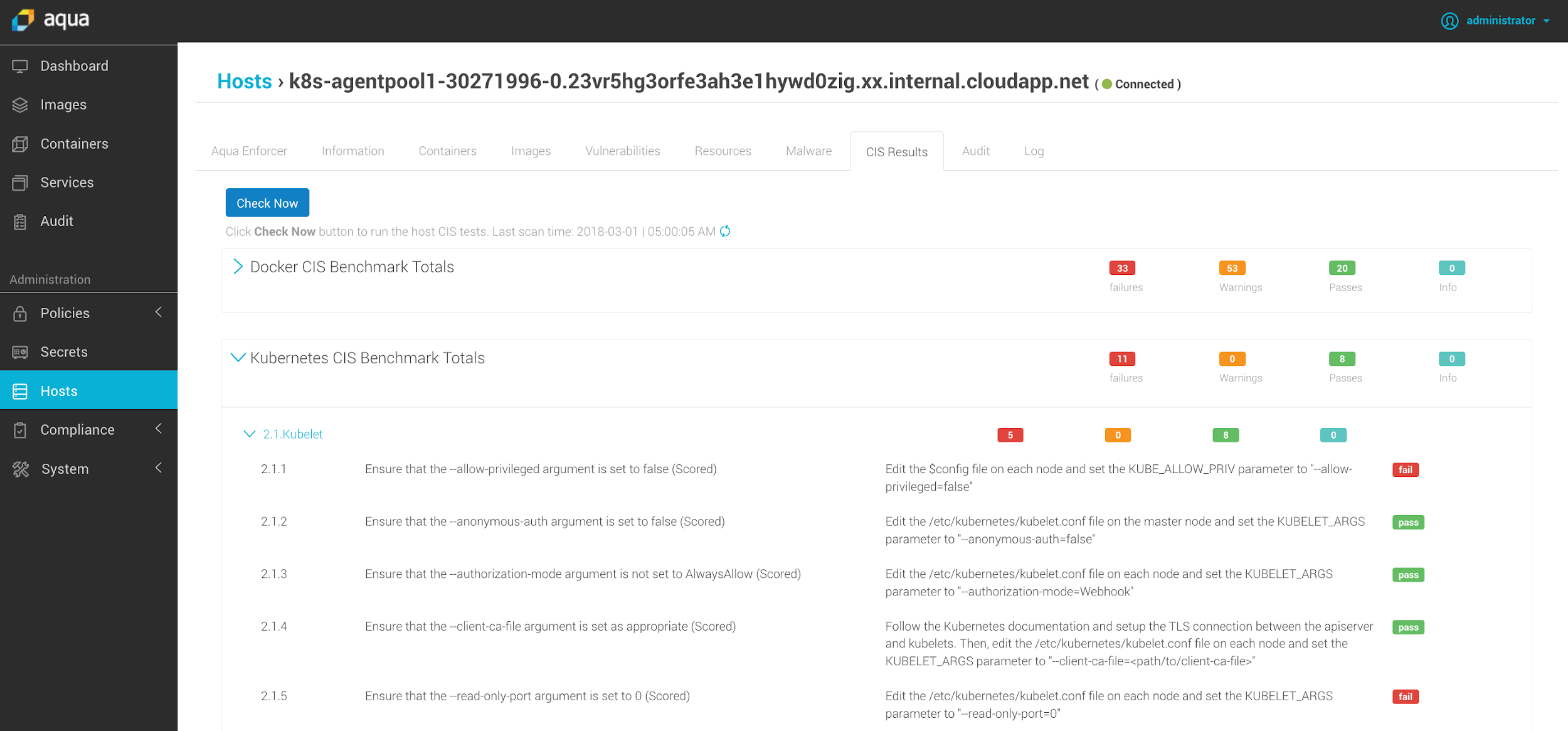

CIS Kubernetes Benchmark

A few months ago we released Kube-Bench, an open source tool written in Go that checks a Kubernetes environment against the long list of tests outlined in the CIS Kubernetes Benchmark. Following the benchmark is highly recommended to ensure that basic best practices are in place, including least privilege, strong authentication, transport layer encryption, and so forth.

Now we’ve included these tests as part of the Aqua compliance checks. They provide a detailed report and can be configured to run daily, creating an audit trail of compliance and security posture.

CIS Kubernetes Benchmark results displayed alongside the CIS Docker Benchmark

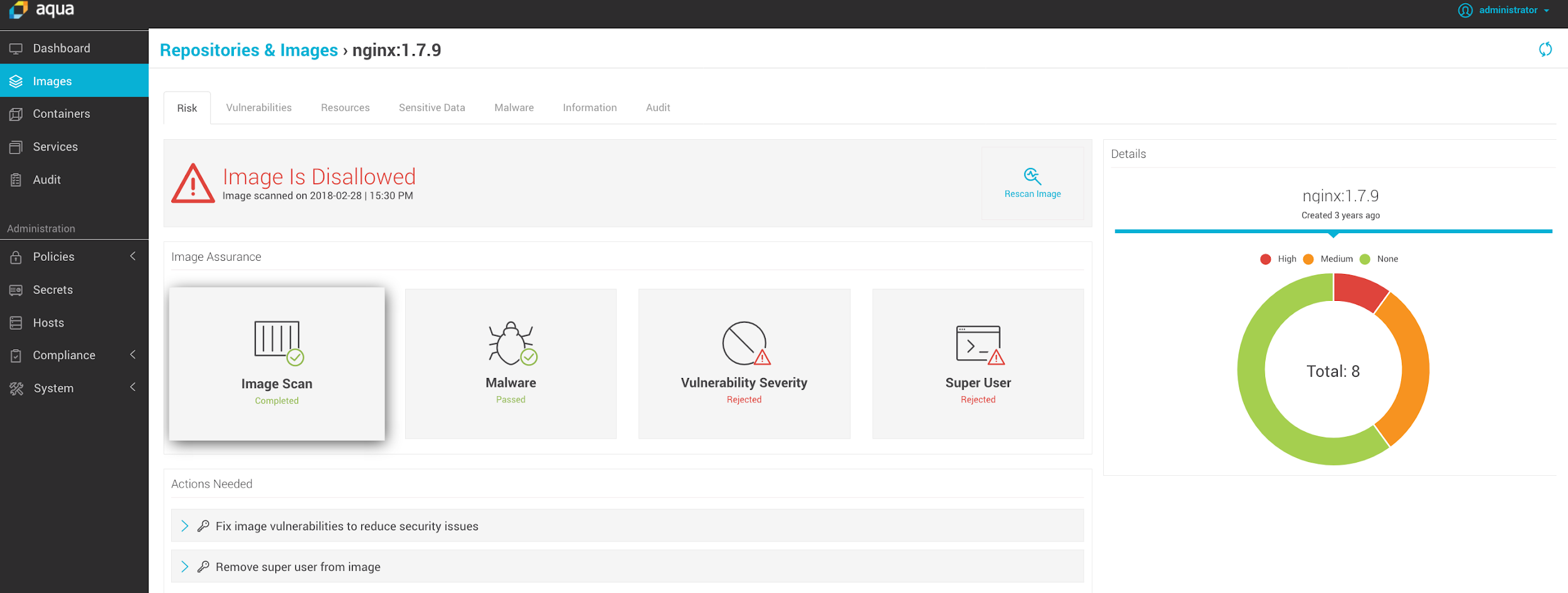
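To illustrate what a single benchmark-style test looks like, here is a minimal Go sketch that inspects kube-apiserver command-line flags. It is not Kube-Bench's code: the `Check` type, the check IDs, and the rule that a missing flag counts as a failure are simplifying assumptions. `--anonymous-auth` and `--profiling` are real kube-apiserver flags of the kind the benchmark examines.

```go
package main

import (
	"fmt"
	"strings"
)

// Check is a hypothetical, simplified benchmark-style test: it passes only
// when the named flag is present with the expected value.
type Check struct {
	ID       string // illustrative label, not an official CIS check number
	Flag     string // flag name without the leading "--"
	Expected string
}

// parseFlags turns "--name=value" arguments into a map. A flag given
// without "=value" is recorded as "true"; non-flag arguments are skipped.
func parseFlags(args []string) map[string]string {
	flags := make(map[string]string)
	for _, arg := range args {
		if !strings.HasPrefix(arg, "--") {
			continue
		}
		name, value, found := strings.Cut(strings.TrimPrefix(arg, "--"), "=")
		if !found {
			value = "true"
		}
		flags[name] = value
	}
	return flags
}

// runChecks returns the IDs of the checks that fail for the given
// command line. A missing flag counts as a failure in this sketch.
func runChecks(args []string, checks []Check) []string {
	flags := parseFlags(args)
	var failed []string
	for _, c := range checks {
		if flags[c.Flag] != c.Expected {
			failed = append(failed, c.ID)
		}
	}
	return failed
}

func main() {
	checks := []Check{
		{ID: "apiserver-anonymous-auth", Flag: "anonymous-auth", Expected: "false"},
		{ID: "apiserver-profiling", Flag: "profiling", Expected: "false"},
	}
	// Example command line as it might appear in the API server's process list.
	args := []string{"kube-apiserver", "--anonymous-auth=false", "--profiling=true"}
	fmt.Println("failed checks:", runChecks(args, checks))
}
```

Running it prints `failed checks: [apiserver-profiling]`. Scheduling a run like this daily and keeping each report is what turns individual checks into an audit trail.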

Image Assurance at Scale

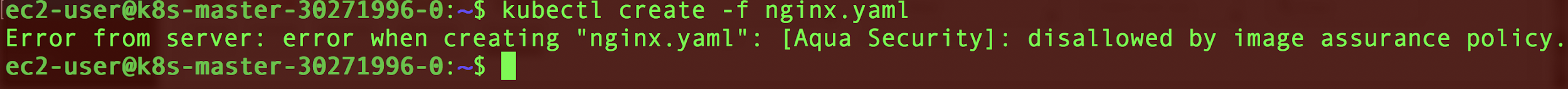

Aqua’s image assurance is one of our most sought-after features – it’s the ability to ensure that only approved images can run in your environment. Following your custom security policy, images can be failed based on the severity of vulnerabilities, over-provisioned privileges, CVSS scores, the presence of malware, embedded secrets, etc. Plus, any image that is not recognized by the system, i.e. did not go through this vetting process, will not be allowed to run. We’ve been doing this at the host level since Aqua CSP v1.0.
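The policy evaluation described above can be sketched in Go as a function that collects every reason an image fails. The `ScanResult` and `Policy` types, their fields, and the sample values are hypothetical and not Aqua's data model; running as root stands in for "over-provisioned privileges". The one rule taken directly from the text is that unregistered images are always denied.

```go
package main

import "fmt"

// ScanResult and Policy are hypothetical types for illustration only;
// they are not Aqua's data model or API.
type ScanResult struct {
	Image      string
	Registered bool    // the image went through the vetting process
	MaxCVSS    float64 // highest CVSS score among detected vulnerabilities
	Malware    bool
	Secrets    bool // embedded secrets such as keys or passwords
	RunsAsRoot bool // stand-in for "over-provisioned privileges"
}

type Policy struct {
	MaxCVSS      float64 // fail images with any vulnerability scored above this
	BlockMalware bool
	BlockSecrets bool
	BlockRoot    bool
}

// Evaluate returns every reason the image fails the policy. An empty
// result means the image is approved to run.
func Evaluate(r ScanResult, p Policy) []string {
	var reasons []string
	if !r.Registered {
		// Unknown images are denied regardless of any other setting.
		reasons = append(reasons, "image not registered")
	}
	if r.MaxCVSS > p.MaxCVSS {
		reasons = append(reasons, fmt.Sprintf("CVSS %.1f exceeds limit %.1f", r.MaxCVSS, p.MaxCVSS))
	}
	if p.BlockMalware && r.Malware {
		reasons = append(reasons, "malware detected")
	}
	if p.BlockSecrets && r.Secrets {
		reasons = append(reasons, "embedded secrets detected")
	}
	if p.BlockRoot && r.RunsAsRoot {
		reasons = append(reasons, "runs as root")
	}
	return reasons
}

func main() {
	policy := Policy{MaxCVSS: 7.0, BlockMalware: true, BlockSecrets: true, BlockRoot: true}
	// Hypothetical scan values for an example image.
	img := ScanResult{Image: "registry.example.com/web:1.0", Registered: true, MaxCVSS: 9.8}
	if reasons := Evaluate(img, policy); len(reasons) > 0 {
		fmt.Printf("%s denied: %v\n", img.Image, reasons)
	} else {
		fmt.Printf("%s approved\n", img.Image)
	}
}
```

Returning all reasons, rather than stopping at the first failure, makes the report more useful to whoever has to fix the image.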

Now with Aqua 3.0, we’ve extended image assurance to the K8S cluster level by blocking disallowed images at the Master Node. For Kubernetes clusters, this means a layered defense with two choke points. It also makes the Aqua Enforcer more efficient, since it is far less likely to encounter disallowed images: instead of blocking a disallowed image on every node/host, we can now block it once for the entire cluster.

Kubectl create command blocked due to a disallowed nginx image
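Kubernetes' native mechanism for a cluster-wide choke point of this kind is a validating admission webhook: the API server sends each matching request to the webhook as an `AdmissionReview` object and rejects it if the response carries `allowed: false`. The Go sketch below shows only the decision logic, using hand-written subsets of the `admission.k8s.io/v1` types rather than the official client libraries. The allowlist, the registry name, and the request UID are hypothetical, and we don't claim this is how Aqua implements the check.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Minimal subsets of the admission.k8s.io/v1 AdmissionReview and core/v1
// Pod types: only the fields this sketch reads or writes are declared.
type AdmissionReview struct {
	APIVersion string             `json:"apiVersion"`
	Kind       string             `json:"kind"`
	Request    *AdmissionRequest  `json:"request,omitempty"`
	Response   *AdmissionResponse `json:"response,omitempty"`
}

type AdmissionRequest struct {
	UID    string `json:"uid"`
	Object Pod    `json:"object"`
}

type Pod struct {
	Spec PodSpec `json:"spec"`
}

type PodSpec struct {
	Containers []Container `json:"containers"`
}

type Container struct {
	Image string `json:"image"`
}

type AdmissionResponse struct {
	UID     string  `json:"uid"`
	Allowed bool    `json:"allowed"`
	Status  *Status `json:"status,omitempty"`
}

type Status struct {
	Message string `json:"message"`
}

// review admits the pod only if every container image is on the allowlist,
// a hypothetical stand-in for an image-assurance verdict.
func review(in AdmissionReview, approved map[string]bool) AdmissionReview {
	// The response must echo the UID of the request it answers.
	resp := &AdmissionResponse{UID: in.Request.UID, Allowed: true}
	for _, c := range in.Request.Object.Spec.Containers {
		if !approved[c.Image] {
			resp.Allowed = false
			resp.Status = &Status{Message: fmt.Sprintf("image %q is not approved", c.Image)}
			break
		}
	}
	return AdmissionReview{APIVersion: "admission.k8s.io/v1", Kind: "AdmissionReview", Response: resp}
}

func main() {
	approved := map[string]bool{"registry.example.com/web:1.0": true}
	sample := `{"apiVersion":"admission.k8s.io/v1","kind":"AdmissionReview",
		"request":{"uid":"705ab4f5","object":{"spec":{"containers":[{"image":"nginx"}]}}}}`
	var in AdmissionReview
	if err := json.Unmarshal([]byte(sample), &in); err != nil || in.Request == nil {
		panic("malformed AdmissionReview")
	}
	out, err := json.Marshal(review(in, approved))
	if err != nil {
		panic(err)
	}
	fmt.Println(string(out))
}
```

A real webhook would serve this logic from an HTTPS endpoint registered through a `ValidatingWebhookConfiguration`. Because the decision is made once by the API server, a denied image never reaches any node.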


User Access Control at Scale

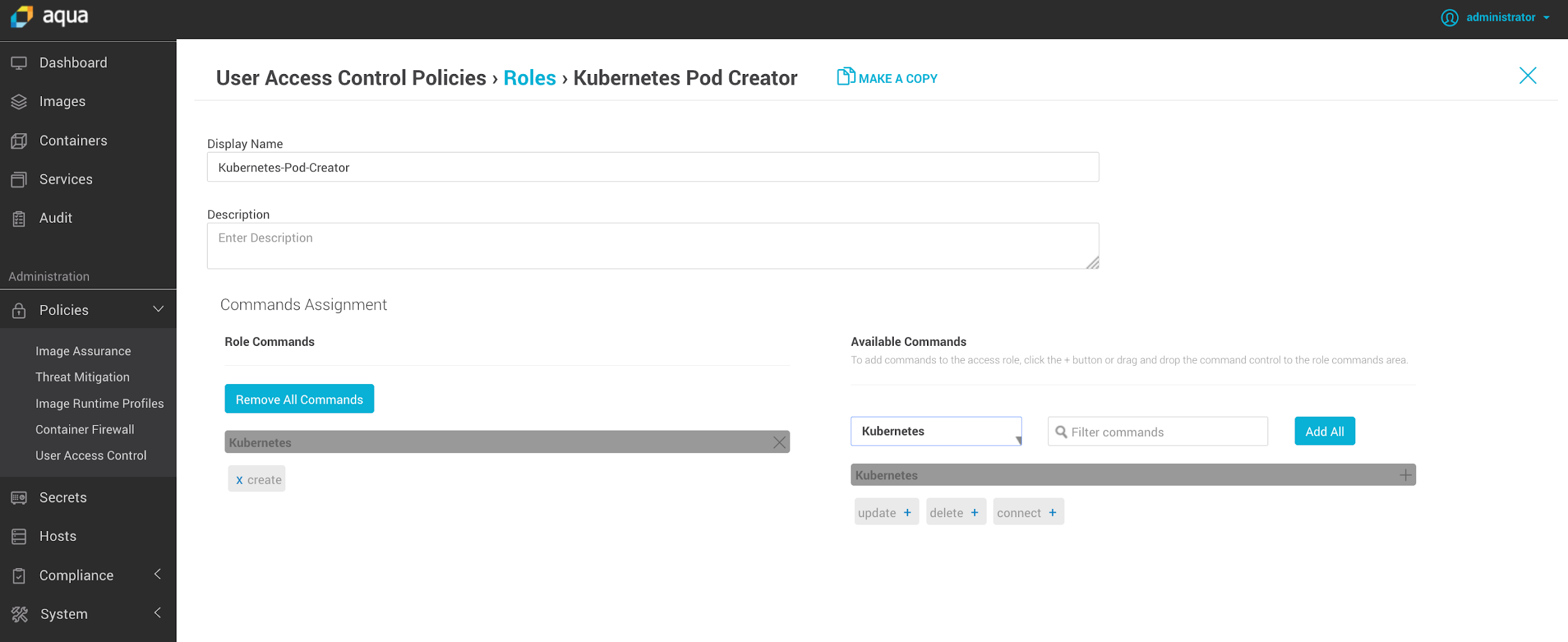

Kubernetes offers many options to govern user authorization and control access to different constructs of a cluster. However, security teams often need a more scalable, enterprise-wide way to create policies across application teams and enforce tighter controls. This is where Aqua steps in, with custom roles that can be applied across deployments, namespaces and clusters, and that control access to kubectl commands at a granular level.

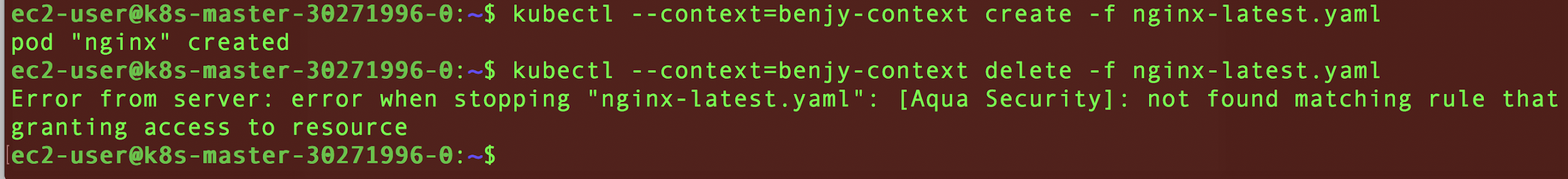

In the example below, we defined a role that permits the kubectl create command but not the kubectl delete command, so the delete command is blocked as unauthorized.

Granular custom roles to control user authorization to use kubectl commands
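The core of such a role check is default-deny evaluation: a command runs only if some role held by the user explicitly permits that verb in that namespace. Native Kubernetes RBAC expresses the same idea with `Role` and `ClusterRole` rules that list API verbs such as `create` and `delete`. The Go sketch below is hypothetical: the `Role` type, its fields, and the `"*"` namespace wildcard are assumptions for illustration, not Aqua's role model.

```go
package main

import "fmt"

// Role is a hypothetical custom role: the kubectl verbs it permits and the
// namespaces it applies to ("*" matches any namespace).
type Role struct {
	Name       string
	Verbs      map[string]bool
	Namespaces map[string]bool
}

// Authorize reports whether any of the user's roles permits the verb in the
// namespace. Anything not explicitly permitted is denied.
func Authorize(roles []Role, verb, namespace string) bool {
	for _, r := range roles {
		if r.Verbs[verb] && (r.Namespaces["*"] || r.Namespaces[namespace]) {
			return true
		}
	}
	return false
}

func main() {
	deployer := Role{
		Name:       "deployer",
		Verbs:      map[string]bool{"get": true, "create": true},
		Namespaces: map[string]bool{"staging": true},
	}
	for _, verb := range []string{"create", "delete"} {
		fmt.Printf("kubectl %s in staging: allowed=%v\n", verb, Authorize([]Role{deployer}, verb, "staging"))
	}
}
```

This mirrors the example above: the program reports `allowed=true` for `create` and `allowed=false` for `delete`.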

Runtime Protection

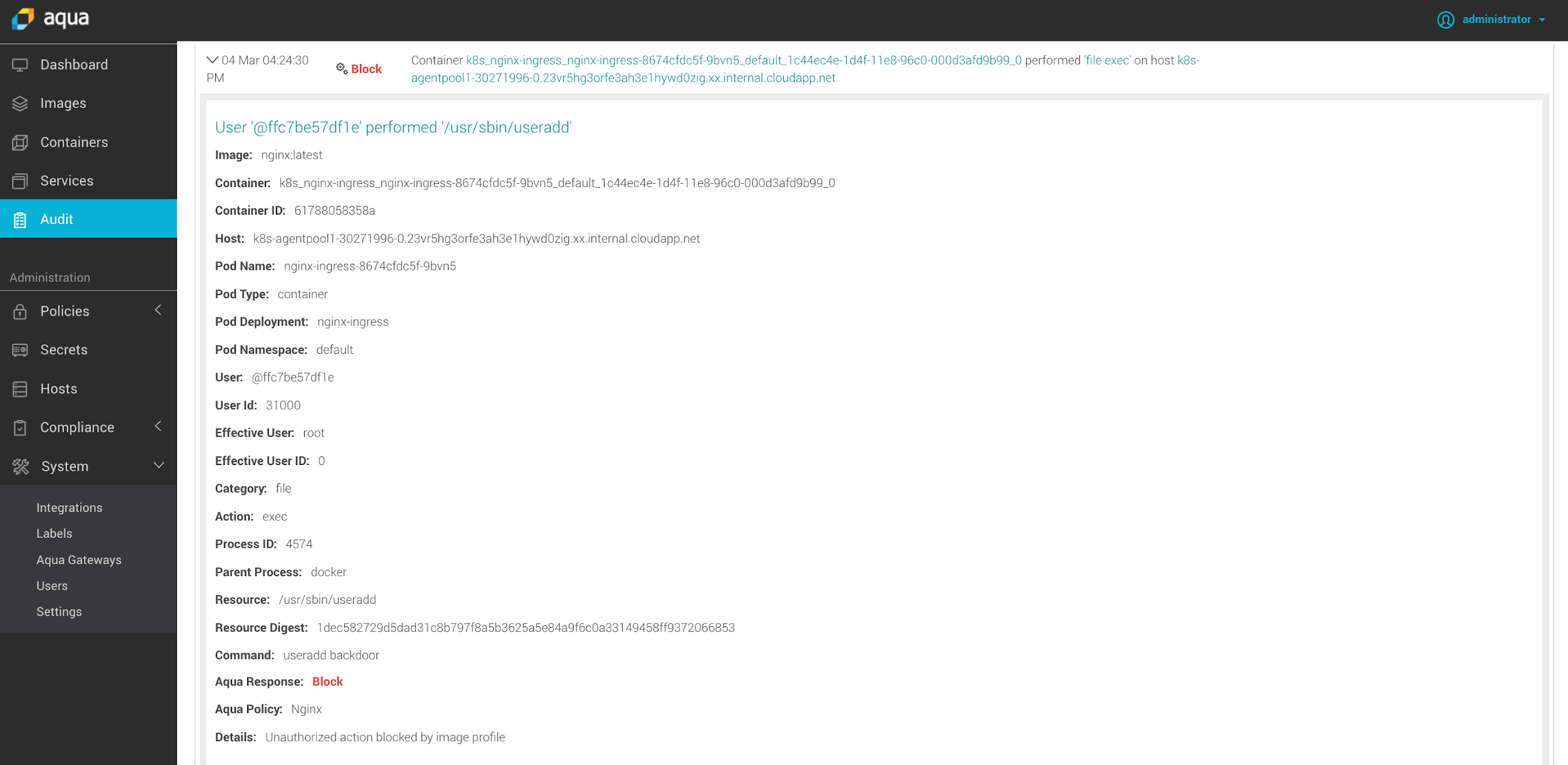

In Aqua you can create both automatic (machine-learned) and manual security profiles that whitelist permitted container activities (such as file access, user namespace, executables, volumes, etc.). This ensures that containers have only the minimum privileges and capabilities they need to function, and it blocks attempted attacks, even zero-days, by not permitting anything outside the scope of the policy.

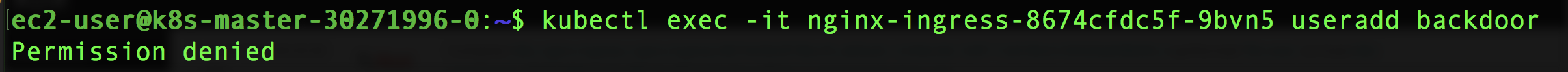

In the example below, someone tries to add a new user to a running container using the kubectl exec command. Since this is not permitted, the operation is denied and the event is logged. Note the Kubernetes context included in the logged event: pod name, type, deployment, and namespace.

Blocking an attempt to add a user to a running container using kubectl exec
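The whitelisting principle behind runtime profiles can be sketched in a few lines of Go. This is a deliberately reduced, hypothetical model that covers only executables (a real profile also covers file access, volumes, and more), it decides on an exec attempt that has already been observed rather than showing how interception works, and the log format and pod, deployment, and namespace names are made up.

```go
package main

import "fmt"

// Profile is a hypothetical runtime profile that whitelists the
// executables a container may run; everything else is denied.
type Profile struct {
	AllowedExecutables map[string]bool
}

// ExecAttempt carries the Kubernetes context attached to a runtime event.
type ExecAttempt struct {
	Pod, Namespace, Deployment string
	Executable                 string
}

// Enforce decides whether the attempt is within the profile and returns a
// log line that includes the Kubernetes context. The log format is
// illustrative only.
func Enforce(p Profile, a ExecAttempt) (bool, string) {
	ok := p.AllowedExecutables[a.Executable]
	verdict := "allowed"
	if !ok {
		verdict = "denied"
	}
	line := fmt.Sprintf("exec %s %s pod=%s deployment=%s namespace=%s",
		a.Executable, verdict, a.Pod, a.Deployment, a.Namespace)
	return ok, line
}

func main() {
	profile := Profile{AllowedExecutables: map[string]bool{"/usr/sbin/nginx": true}}
	attempt := ExecAttempt{
		Pod: "web-5d8f7c-abcde", Namespace: "shop", Deployment: "web",
		Executable: "/usr/sbin/useradd", // e.g. launched via kubectl exec
	}
	_, logLine := Enforce(profile, attempt)
	fmt.Println(logLine)
}
```

Because the profile lists what is allowed rather than what is forbidden, `useradd` is denied without anyone having anticipated that particular attack.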

Container Firewall for Kubernetes

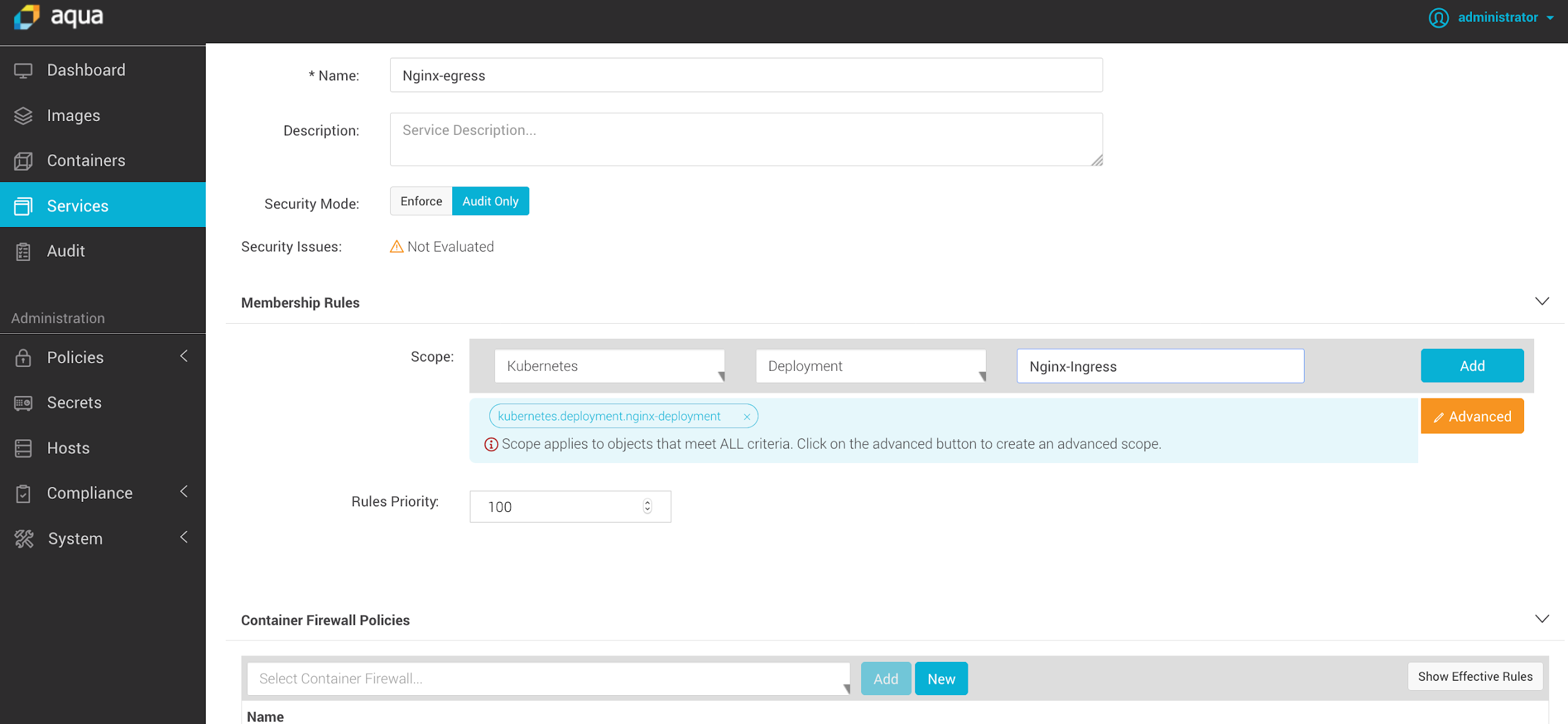

Aqua’s nano-segmentation functionality allows us to create inter-container firewall rules to enforce segmentation between applications and trust levels. With Kubernetes this may translate into segmentation between different clusters, different namespaces within the same cluster, or different deployments within the same namespace/cluster.

Aqua allows you to define such scopes and choose whether to activate them in Enforce mode (where it blocks forbidden connections) or Audit mode (where it only generates alerts/events).

Define network policy scope by Kubernetes Cluster, Namespace or Deployment
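The interplay between scope and mode can be sketched as follows. Everything here is a hypothetical model, not Aqua's policy format: a scope is a cluster/namespace/deployment triple where an empty field acts as a wildcard, whitelisted connections are keyed by deployment name only (for brevity), and a forbidden connection always raises an alert but is blocked only in Enforce mode.

```go
package main

import "fmt"

// Mode mirrors the two options: Audit only alerts, Enforce also blocks.
type Mode int

const (
	Audit Mode = iota
	Enforce
)

// Workload identifies a container's location in Kubernetes terms.
type Workload struct {
	Cluster, Namespace, Deployment string
}

// Scope selects workloads; an empty field acts as a wildcard.
type Scope Workload

func (s Scope) Contains(w Workload) bool {
	return (s.Cluster == "" || s.Cluster == w.Cluster) &&
		(s.Namespace == "" || s.Namespace == w.Namespace) &&
		(s.Deployment == "" || s.Deployment == w.Deployment)
}

// Policy whitelists "src->dst" deployment pairs for destinations in scope.
type Policy struct {
	Scope   Scope
	Mode    Mode
	Allowed map[string]bool
}

// Decision reports whether the connection may proceed and whether an
// alert/event is generated.
type Decision struct {
	Permit, Alert bool
}

func (p Policy) Check(src, dst Workload) Decision {
	if !p.Scope.Contains(dst) {
		return Decision{Permit: true} // this policy does not apply
	}
	if p.Allowed[src.Deployment+"->"+dst.Deployment] {
		return Decision{Permit: true}
	}
	// Forbidden connection: always alert, block only in Enforce mode.
	return Decision{Permit: p.Mode == Audit, Alert: true}
}

func main() {
	web := Workload{Cluster: "prod", Namespace: "shop", Deployment: "web"}
	db := Workload{Cluster: "prod", Namespace: "shop", Deployment: "db"}
	batch := Workload{Cluster: "prod", Namespace: "jobs", Deployment: "batch"}
	p := Policy{
		Scope:   Scope{Cluster: "prod", Namespace: "shop"},
		Mode:    Enforce,
		Allowed: map[string]bool{"web->db": true},
	}
	fmt.Printf("web->db:   %+v\n", p.Check(web, db))
	fmt.Printf("batch->db: %+v\n", p.Check(batch, db))
	p.Mode = Audit
	fmt.Printf("batch->db (audit): %+v\n", p.Check(batch, db))
}
```

Starting a new policy in Audit mode and switching to Enforce once the alerts look clean is a natural way to use the two modes.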

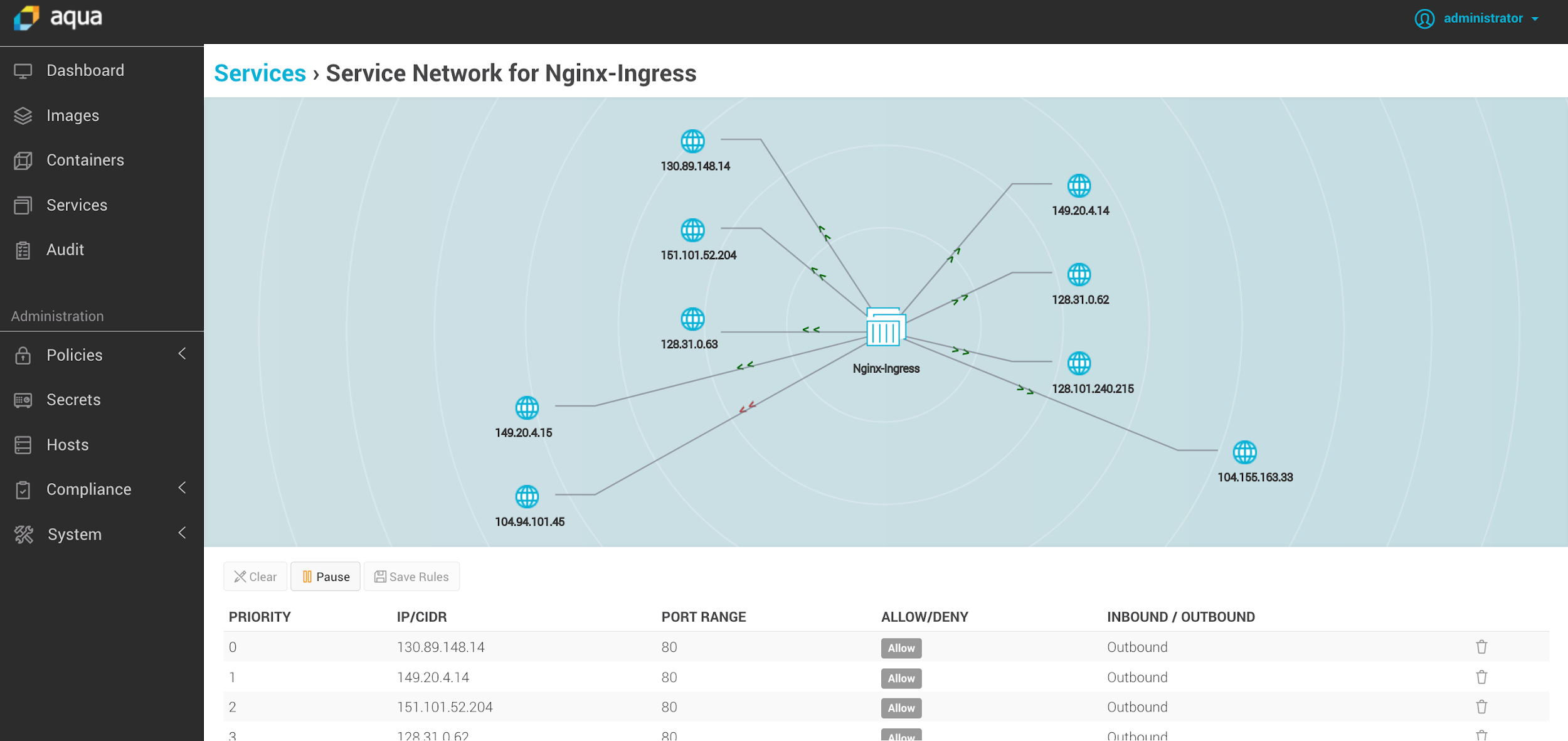

Aqua also visualizes the network connections learned during testing and automatically suggests firewall rules that whitelist those connections; the resulting policy is then enforced according to the defined scope. The firewall operates at the container level, so even if you run multiple containers per pod, enforcement works at the most granular level possible.

Visualize network connections and automatically create firewall rules
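Turning learned traffic into suggested rules boils down to deduplicating the observed flows into a whitelist. In the Go sketch below, the `Connection` type and the `src -> dst:port` rule format are hypothetical; Aqua's actual learning process and rule model are not described in this post.

```go
package main

import (
	"fmt"
	"sort"
)

// Connection is a hypothetical record of traffic observed while the
// application was exercised during testing.
type Connection struct {
	Src, Dst string // deployment names
	Port     int
}

// SuggestRules collapses observed connections into a deduplicated, sorted
// whitelist. Each rule permits exactly one observed src -> dst:port flow,
// so anything not seen during learning remains forbidden.
func SuggestRules(observed []Connection) []string {
	seen := make(map[string]bool)
	var rules []string
	for _, c := range observed {
		rule := fmt.Sprintf("%s -> %s:%d", c.Src, c.Dst, c.Port)
		if !seen[rule] {
			seen[rule] = true
			rules = append(rules, rule)
		}
	}
	sort.Strings(rules)
	return rules
}

func main() {
	observed := []Connection{
		{Src: "web", Dst: "api", Port: 8080},
		{Src: "api", Dst: "db", Port: 5432},
		{Src: "web", Dst: "api", Port: 8080}, // repeated flow
	}
	for _, r := range SuggestRules(observed) {
		fmt.Println(r)
	}
}
```

This prints `api -> db:5432` followed by `web -> api:8080`. Sorting keeps the suggested rule set stable between learning runs, which makes changes easy to review before they are enforced.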

To Learn More

With Aqua 3.0 we’ve introduced the most comprehensive enterprise-grade solution for securing Kubernetes, covering the entire lifecycle of cloud native applications. The Aqua Enforcer itself runs as a Kubernetes DaemonSet, so it’s easy to deploy as part of your environment.