In the rapidly evolving world of artificial intelligence, the rise of Generative AI (GenAI) has sparked a revolution in how we interact with and leverage this technology. GenAI is based on large language models (LLMs) that have demonstrated remarkable capabilities, from generating human-like text to powering conversational interfaces and automating complex tasks.

Even though we are in the early stages of LLM adoption, the initial findings from our customers have found one out 4 customers build LLM-powered applications and around 20% of them are using OpenAI as their LLM. And according to a developer survey by Stack Overflow 70% of developers are using or are planning to use AI tools in their development process.

The adoption of LLM technology has introduced new capabilities for applications, empowering businesses to deliver personalized experiences and increase efficiencies with new and innovative capabilities. The question we ask is how does this affect the security and overall risk exposure of the application?

Integrating GenAI into cloud native environments

Building GenAI or LLM-powered applications offer substantial opportunities for innovation and efficiency, but it also introduces significant risks. As organizations deploy these advanced applications in cloud environments, they face new security challenges that expand their attack surface.

This initial adoption of GenAI has focused primarily on speed and innovation, often without full awareness of the implications for security. Yet to respond to threats effectively, even the ones that may not be fully understood yet, a robust security strategy integrating technological safeguards and governance frameworks is essential. This is why OWASP created the Top 10 list for LLM applications, to provide guidelines for LLM-powered application security.

The OWASP framework helps identify risk

The OWASP framework, adapted for Large Language Models (LLMs), addresses critical security risks in GenAI technologies to help businesses navigate the complexities of LLM-powered application development and deployment to safeguard them against malicious exploits.

Three key areas of focus within the OWASP Top 10 for LLMs that include Prompt Injection, Insecure LLM Interaction, and Data Access. But how do these specifically affect cloud native applications and what should teams be concerned about from these techniques and activities?

- Prompt Injection – This is a new attack technique specific to LLMs, where attackers craft inputs designed to mislead or manipulate the model into generating unintended or harmful responses. This technique exploits the model’s reliance on input prompts for generating outputs, allowing attackers to insert malicious instructions or context within these prompts.

- What’s the risk: Prompt injection risks include attackers manipulating LLM outputs, leading to unauthorized actions or data breaches, compromising system security and integrity.

- Insecure LLM Interaction – As LLMs are interacting with other systems, there’s a risk that their outputs could be leveraged for malicious activities, such as executing unauthorized code or initiating cybersecurity attacks. Furthermore, overly permissive LLMs may amplify this risk and could potentially cause more damage.

- What’s the risk: The risk of Insecure LLM Interactions lies in the exposure of systems to unauthorized access or data leaks, which can compromise both security and data integrity.

- Data access – the LLMs stores all the data it consumes, in the case data leakage occurs when sensitive information is unintentionally exposed or been accessed by unauthorized person through the model’s output, compromising privacy and security.

- What’s the risk: The risk associated with improper data access controls is significant, as it can lead to unauthorized data exposure or breaches, thereby jeopardizing both privacy and security. Proper controls are necessary to mitigate this risk and ensure the security of sensitive data processed by LLMs.

These risks highlight the potential for malicious exploitation and data leakage, emphasizing the need for proactive risk management and why we have enhanced our platform, allowing for the prevention and detection of GenAI-related attacks and tracing them back to specific lines of code.

Securing LLM-powered applications with Aqua

Aqua facilitates secure application development and runtime protection by addressing vulnerabilities outlined in the OWASP Top 10 for LLM applications. By securing applications early in the development cycle, Aqua prevents potential security breaches and reputational damage. Additionally, Aqua’s runtime protection capabilities enable swift identification and remediation of LLM-related issues, empowering development teams to maintain code integrity and security.

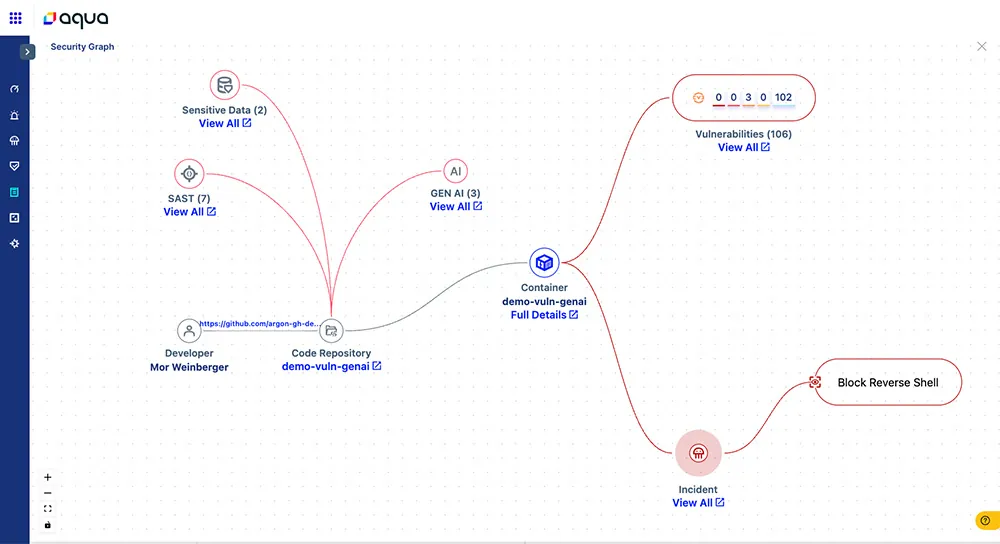

Figure 1: Tracing GenAI incidents form code to cloud with Aqua

Our solution encompasses a multifaceted approach to LLM security that includes:

- Code Integrity: Aqua Security employs advanced code scanning technology to identify and mitigate unsafe use of LLM in your application code, including unauthorized data access, misconfigurations, and vulnerabilities specific to LLM-powered applications. For example, Aqua can detect unauthorized access of LLM outputs which may try to execute malicious code and initiate attacks.

- Real-Time Monitoring: Aqua Security’s runtime protection capabilities actively monitor LLM-powered application workloads and prevents unauthorized actions that LLMs might attempt, such as executing malicious code due to prompt injection attacks.

- GenAI Assurance Policies: Aqua Security employs specific GenAI assurance policies that serve as guardrails for developers of LLM-powered applications. These policies prevent unsafe usage of LLMs and are based on practices from the OWASP Top 10 for LLMs and other recognized industry standards.

These new capabilities seamlessly integrate into our broader cloud native application protection platform (CNAPP), providing a unified solution for holistic protection across the entire cloud native application lifecycle. The Aqua solution equips security teams, DevOps practitioners, and compliance professionals with the tools and expertise needed to navigate the evolving landscape of LLM security.

Businesses and industries eager to embrace the transformative potential of GenAI can bridge the gap between security requirements and LLM risks, and enable their organizations to embrace innovation while mitigating potential risks.

If you want to learn more about how Aqua’s protecting LLM-based applications, please visit us at RSA, booth #1835 in the South Hall.